NCP

STDIO: Consolidates multiple MCP servers into one intelligent orchestrator

1 MCP to rule them all

Your MCPs, supercharged. Find any tool instantly, load on demand, run on schedule, ready for any client. Smart loading saves tokens and energy.

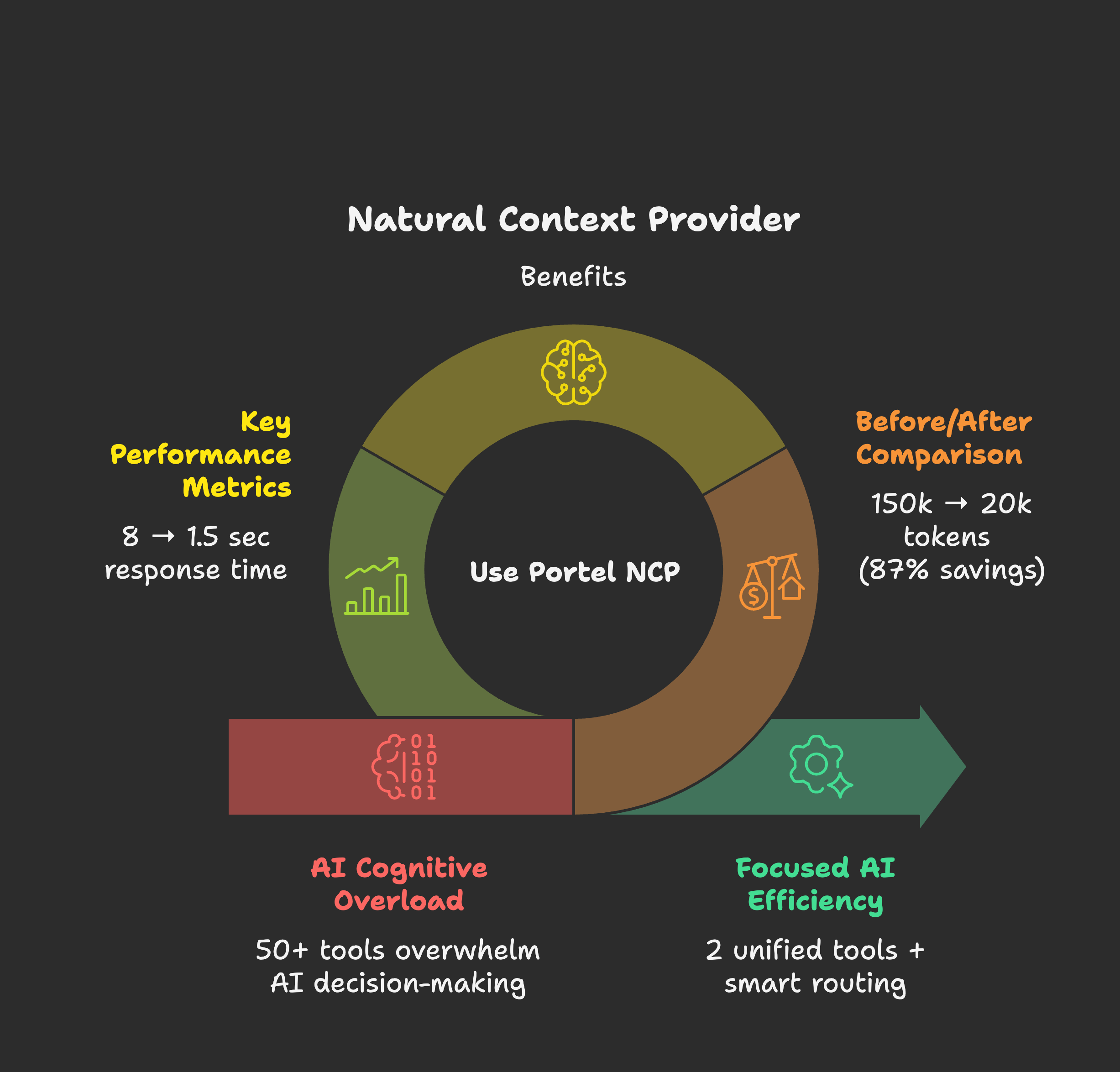

Instead of your AI juggling 50+ tools scattered across different MCPs, NCP gives it a single, unified interface.

Your AI sees just 2 simple tools:

- `find` - "I need to read a file" → finds the right tool automatically
- `run` - Execute any tool you discovered

Behind the scenes, NCP manages all 50+ tools: routing requests, discovering the right tool, caching responses, monitoring health.
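To make the two-tool idea concrete, here is a minimal sketch of an orchestrator that exposes only `find` and `run`. It is not NCP's implementation: the `Orchestrator` class, the registry contents, and the word-overlap scoring are assumptions made for illustration (NCP describes its own discovery as semantic search).

```python
from typing import Callable, Dict, List, Tuple


class Orchestrator:
    """Toy facade: many registered tools, two public entry points."""

    def __init__(self) -> None:
        # Maps "mcp:tool" -> (description, callable). Hypothetical registry.
        self._tools: Dict[str, Tuple[str, Callable[..., object]]] = {}

    def register(self, name: str, description: str, fn: Callable[..., object]) -> None:
        self._tools[name] = (description, fn)

    def find(self, query: str, limit: int = 3) -> List[str]:
        """Rank tools by how many query words appear in their description."""
        words = set(query.lower().split())
        scored = []
        for name, (description, _) in self._tools.items():
            overlap = len(words & set(description.lower().split()))
            if overlap:
                scored.append((overlap, name))
        # Highest overlap first; ties broken alphabetically for determinism.
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [name for _, name in scored[:limit]]

    def run(self, name: str, **params: object) -> object:
        """Route the call to the tool that find() returned."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        _, fn = self._tools[name]
        return fn(**params)


ncp = Orchestrator()
ncp.register("filesystem:read_file", "read the contents of a file",
             lambda path: f"<contents of {path}>")
ncp.register("email:send", "send an email message",
             lambda to, body: f"sent to {to}")

matches = ncp.find("I need to read a file")
result = ncp.run(matches[0], path="/tmp/test.txt")
```

The caller never sees the individual tool schemas; it only needs the name returned by `find` to call `run`.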

Why this matters:

🚀 NEW: Project-level configuration - each project can define its own MCPs automatically

What's MCP? The Model Context Protocol by Anthropic lets AI assistants connect to external tools and data sources. Think of MCPs as "plugins" that give your AI superpowers like file access, web search, databases, and more.

You gave your AI assistant 50 tools to be more capable. Instead, you got desperation:

For example, it second-guesses near-identical tools ("`read_file` or `get_file_content`?").

Think about it like this:

- A child with one toy → Treasures it, masters it, creates endless games with it
- A child with 50 toys → Can't hold them all, gets overwhelmed, stops playing entirely

Your AI is that child. MCPs are the toys. More isn't always better.

The most creative people thrive with constraints, not infinite options. A poet given "write about anything" faces writer's block. Given "write a haiku about rain"? Instant inspiration.

Your AI is the same. Give it one perfect tool → Instant action. Give it 50 tools → Cognitive overload. NCP provides just-in-time tool discovery so your AI gets exactly what it needs, when it needs it.

When your AI assistant manages every tool directly:

    🤖 AI Assistant Context:
    ├── Filesystem MCP (12 tools) ─ 15,000 tokens
    ├── Database MCP (8 tools) ─── 12,000 tokens
    ├── Web Search MCP (6 tools) ── 8,000 tokens
    ├── Email MCP (15 tools) ───── 18,000 tokens
    ├── Shell MCP (10 tools) ───── 14,000 tokens
    ├── GitHub MCP (20 tools) ──── 25,000 tokens
    └── Slack MCP (9 tools) ────── 11,000 tokens

    💀 Total: 80 tools = 103,000 tokens of schemas

What happens:

With NCP as Chief of Staff:

    🤖 AI Assistant Context:
    └── NCP (2 unified tools) ──── 2,500 tokens

    🎯 Behind the scenes: NCP manages all 80 tools
    📈 Context saved: 100,500 tokens (97% reduction!)
    ⚡ Decision time: Sub-second tool selection
    🎪 AI behavior: Confident, focused, decisive
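The totals in the two diagrams can be checked with a few lines of arithmetic. The sketch below only re-adds the per-MCP figures quoted above; the variable names are illustrative.

```python
# (tools, schema tokens) per MCP, copied from the diagram above.
direct = {
    "Filesystem": (12, 15_000),
    "Database": (8, 12_000),
    "Web Search": (6, 8_000),
    "Email": (15, 18_000),
    "Shell": (10, 14_000),
    "GitHub": (20, 25_000),
    "Slack": (9, 11_000),
}
ncp_tokens = 2_500  # the two unified NCP tools

total_tools = sum(tools for tools, _ in direct.values())
total_tokens = sum(tokens for _, tokens in direct.values())
saved = total_tokens - ncp_tokens
reduction = saved / total_tokens

print(f"{total_tools} tools, {total_tokens:,} tokens without NCP")
print(f"{saved:,} tokens saved ({reduction:.1%} reduction)")
```

The ratio works out slightly above the 97% quoted in the diagram, which rounds down.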

Real results from our testing:

| Your MCP Setup | Without NCP | With NCP | Token Savings |
|---|---|---|---|
| Small (5 MCPs, 25 tools) | 15,000 tokens | 8,000 tokens | 47% saved |
| Medium (15 MCPs, 75 tools) | 45,000 tokens | 12,000 tokens | 73% saved |
| Large (30 MCPs, 150 tools) | 90,000 tokens | 15,000 tokens | 83% saved |
| Enterprise (50+ MCPs, 250+ tools) | 150,000 tokens | 20,000 tokens | 87% saved |

Translation:

Choose your MCP client for setup instructions:

| Client | Description | Setup Guide |
|---|---|---|
| Claude Desktop | Anthropic's official desktop app. Best for NCP - one-click .dxt install with auto-sync | → Full Guide |
| Claude Code | Terminal-first AI workflow. Works out of the box! | Built-in support |
| VS Code | GitHub Copilot with Agent Mode. Use NCP for semantic tool discovery | → Setup |
| Cursor | AI-first code editor with Composer. Popular VS Code alternative | → Setup |
| Windsurf | Codeium's AI-native IDE with Cascade. Built on VS Code | → Setup |
| Cline | VS Code extension for AI-assisted development with MCP support | → Setup |
| Continue | VS Code AI assistant with Agent Mode and local LLM support | → Setup |
| Want more clients? | See the full list of MCP-compatible clients and tools | Official MCP Clients • Awesome MCP |
| Other Clients | Any MCP-compatible client via npm | Quick Start ↓ |

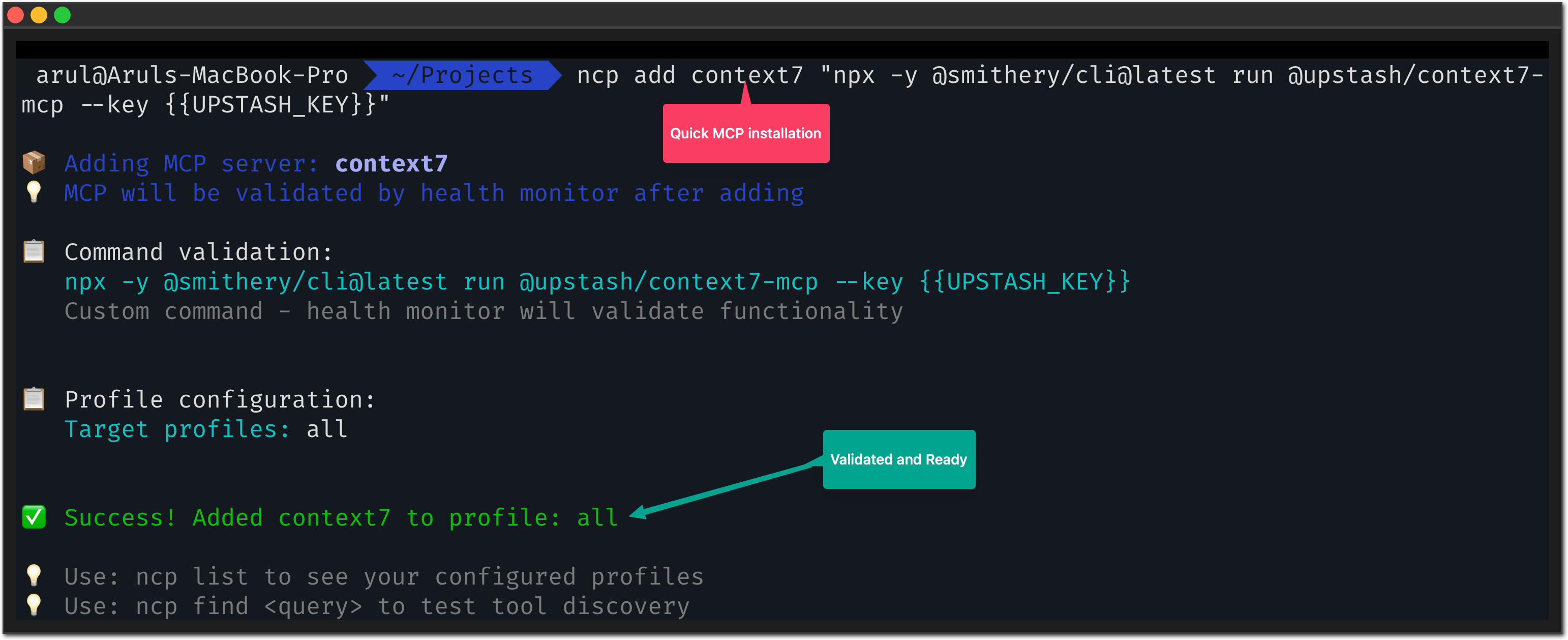

For advanced users or MCP clients not listed above:

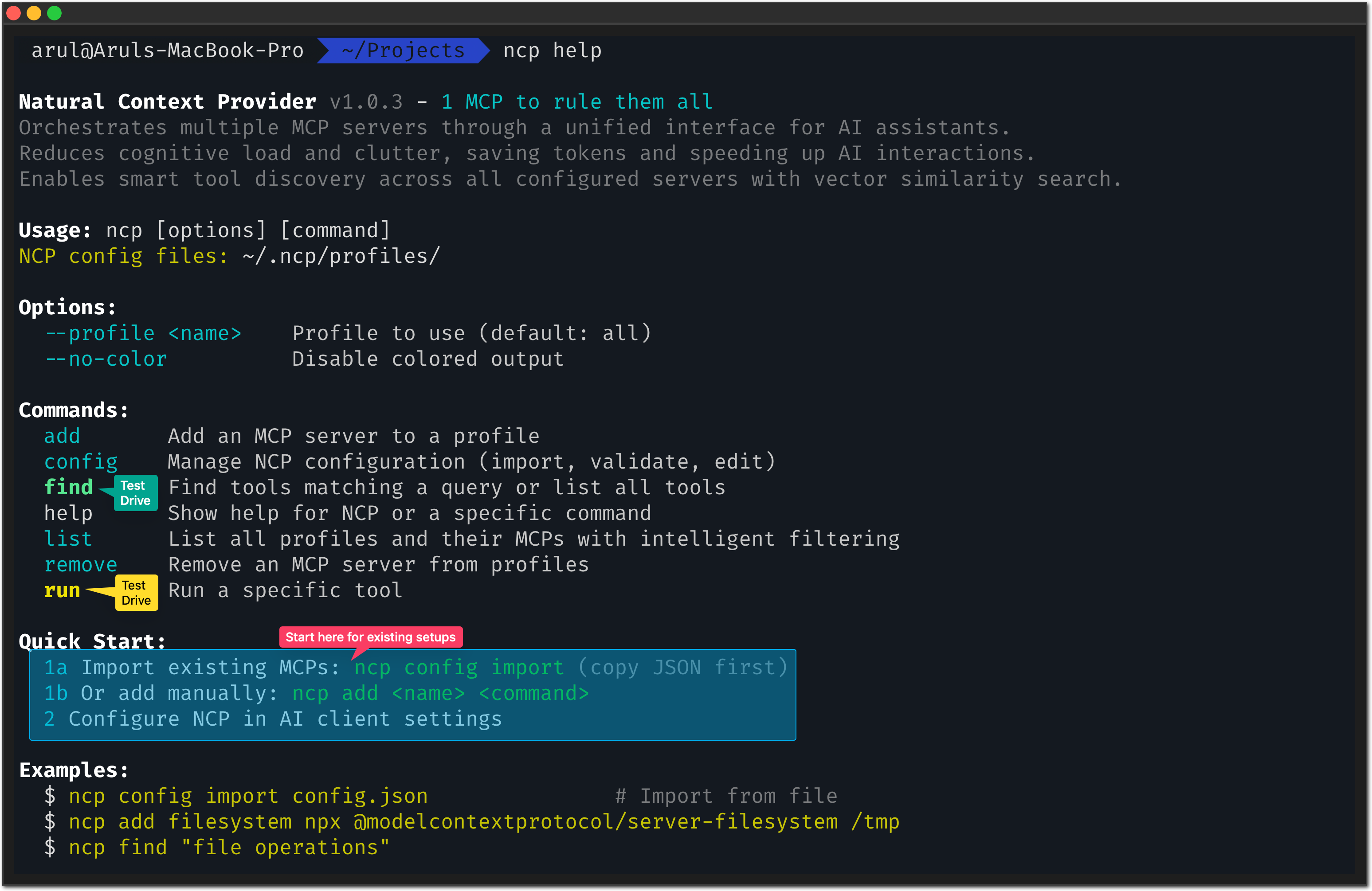

Step 1: Install NCP

    npm install -g @portel/ncp

Step 2: Import existing MCPs (optional)

    ncp config import
    # Paste your config JSON when prompted

Step 3: Configure your MCP client

Add to your client's MCP configuration:

    {
      "mcpServers": {
        "ncp": {
          "command": "ncp"
        }
      }
    }

✅ Done! Your AI now sees just 2 tools instead of 50+.
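If you would rather script Step 3 than edit the file by hand, the sketch below merges the `ncp` entry into an existing JSON config without touching other servers. The config location differs per client and is not stated here, so `config_path` and the file name are placeholders; the demo runs against a temporary file.

```python
import json
import tempfile
from pathlib import Path


def add_ncp_server(config_path: Path) -> dict:
    """Add the "ncp" entry from Step 3, keeping any other servers intact."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    servers["ncp"] = {"command": "ncp"}
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config


# Demo against a throwaway file; point config_path at your client's real config.
with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "mcp-config.json"  # hypothetical file name
    config_path.write_text(
        json.dumps({"mcpServers": {"other": {"command": "other-server"}}})
    )
    updated = add_ncp_server(config_path)
    written = json.loads(config_path.read_text())
```

Back up the real file before running something like this against it.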

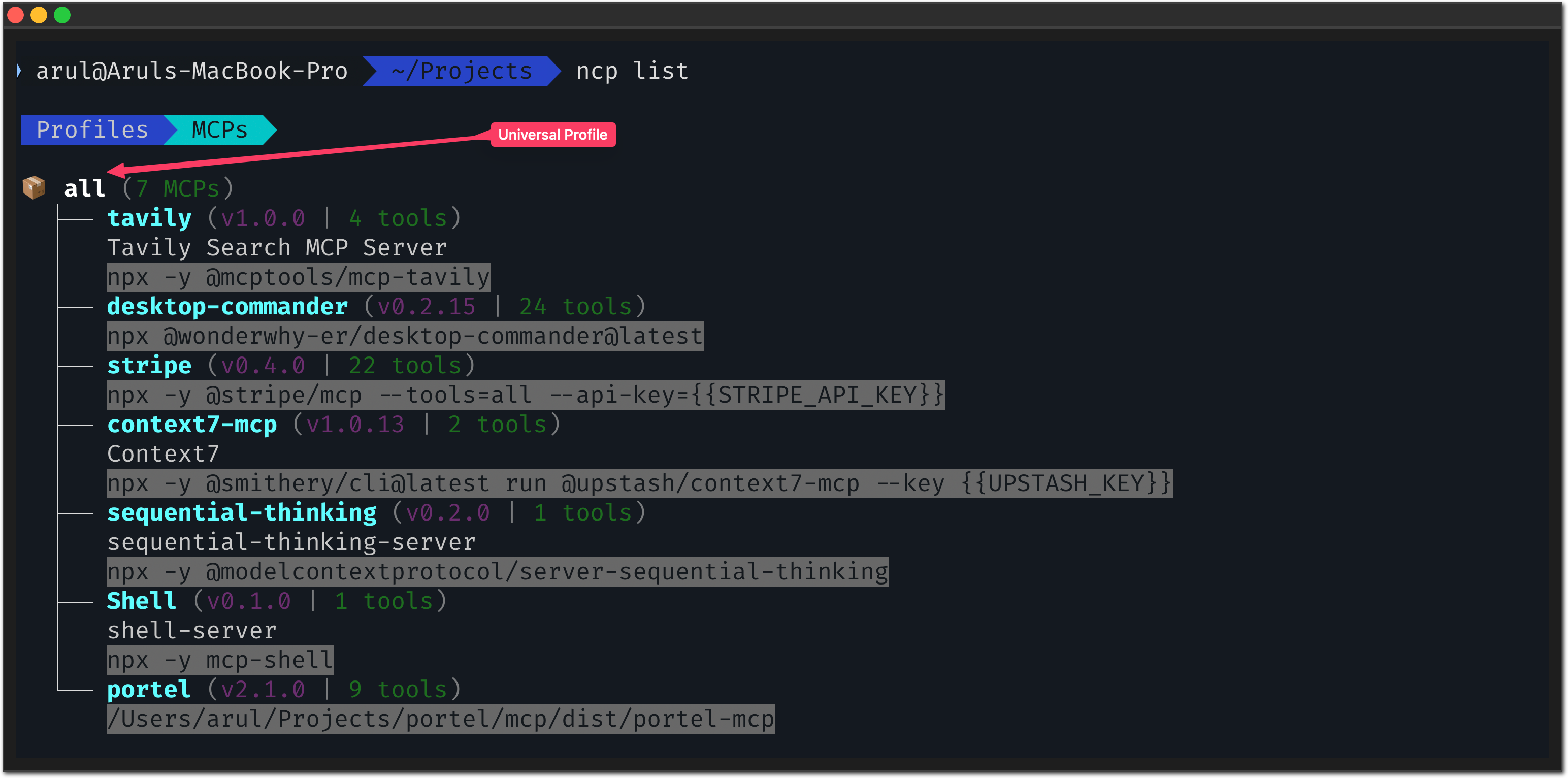

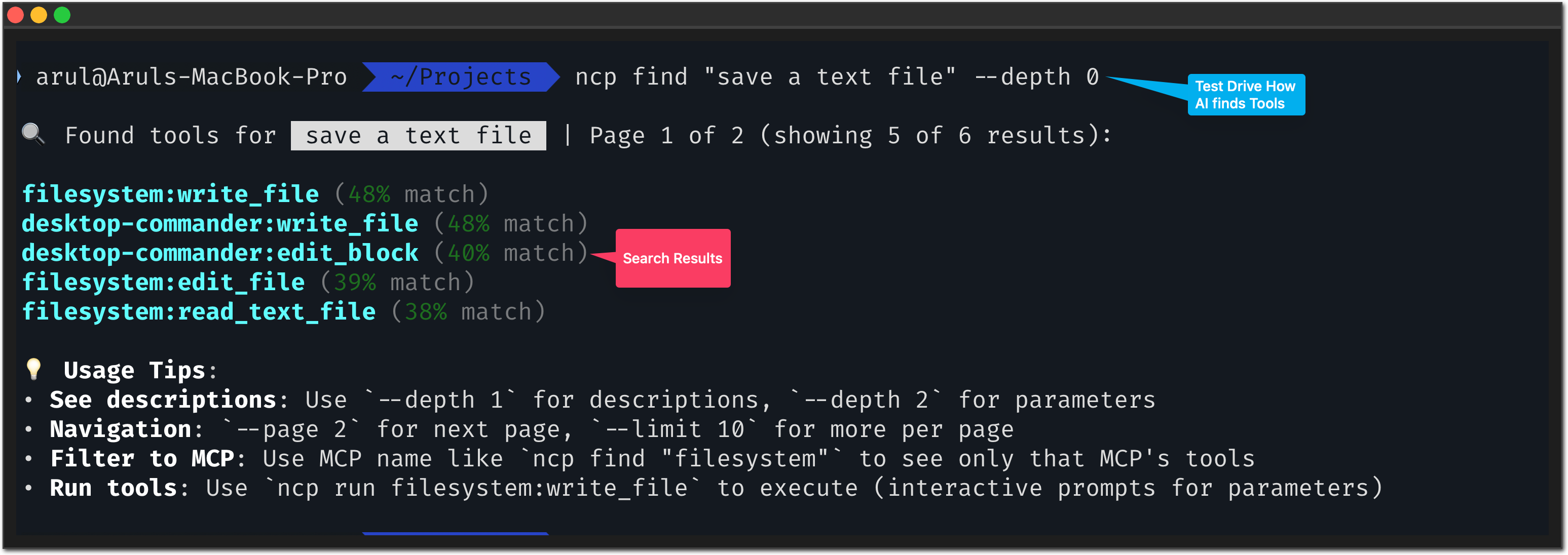

Want to experience what your AI experiences? NCP has a human-friendly CLI:

    # Ask like your AI would ask:
    ncp find "I need to read a file"
    ncp find "help me send an email"
    ncp find "search for something online"

Notice: NCP understands intent, not just keywords. Just like your AI needs.

    # See your complete MCP ecosystem:
    ncp list --depth 2

    # Get help anytime:
    ncp --help

    # Test any tool safely:
    ncp run filesystem:read_file --params '{"path": "/tmp/test.txt"}'

Why this matters: You can debug and test tools directly, just like your AI would use them.
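When you drive `ncp run` from a script, the `--params` JSON has to survive shell quoting. A small sketch, using only the command form shown above; the helper name `ncp_run_command` is made up for this example.

```python
import json
import shlex


def ncp_run_command(tool: str, params: dict) -> str:
    """Return a shell-safe `ncp run` command line for the given tool and params."""
    payload = json.dumps(params)
    return f"ncp run {shlex.quote(tool)} --params {shlex.quote(payload)}"


command = ncp_run_command("filesystem:read_file", {"path": "/tmp/test.txt"})
print(command)
```

Serializing with `json.dumps` and quoting with `shlex.quote` avoids hand-escaping quotes or spaces inside parameter values.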

    # 1. Check NCP is installed correctly
    ncp --version

    # 2. Confirm your MCPs are imported
    ncp list

    # 3. Test tool discovery
    ncp find "file"

    # 4. Test a simple tool (if you have filesystem MCP)
    ncp run filesystem:read_file --params '{"path": "/tmp/test.txt"}' --dry-run

✅ Success indicators:

- `ncp list` shows your imported MCPs
- `ncp find` returns relevant tools

Bottom line: Your AI goes from desperate assistant to executive assistant.

Here's exactly how NCP empowers your MCPs:

| Feature | What It Does | Why It Matters |
|---|---|---|
| 🔍 Instant Tool Discovery | Semantic search understands intent ("read a file") not just keywords | Your AI finds the RIGHT tool in <1s instead of analyzing 50 schemas |
| 📦 On-Demand Loading | MCPs and tools load only when needed, not at startup | Saves 97% of context tokens - AI starts working immediately |
| ⏰ Automated Scheduling | Run any tool on cron schedules or natural language times | Background automation without keeping AI sessions open |
| 🔌 Universal Compatibility | Works with Claude Desktop, Claude Code, Cursor, VS Code, and any MCP client | One configuration for all your AI tools - no vendor lock-in |
| 💾 Smart Caching | Intelligent caching of tool schemas and responses | Eliminates redundant indexing - energy efficient and fast |

The result: Your MCPs go from scattered tools to a unified, intelligent system that your AI can actually use effectively.
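On-demand loading and caching are easiest to see in miniature. The following is a rough sketch of the general pattern only, not NCP's code: `LazyRegistry` and the string stand-ins for MCP connections are hypothetical.

```python
from typing import Callable, Dict


class LazyRegistry:
    """Connects to an MCP the first time one of its tools is requested."""

    def __init__(self, connectors: Dict[str, Callable[[], object]]) -> None:
        self._connectors = connectors  # name -> function that starts the MCP
        self._connections: Dict[str, object] = {}  # started MCPs, reused afterwards
        self.starts: Dict[str, int] = {name: 0 for name in connectors}

    def get(self, name: str) -> object:
        if name not in self._connections:
            self.starts[name] += 1
            self._connections[name] = self._connectors[name]()
        return self._connections[name]


registry = LazyRegistry({
    "filesystem": lambda: "filesystem-connection",  # stand-ins for real processes
    "github": lambda: "github-connection",
})

registry.get("filesystem")
registry.get("filesystem")  # second call reuses the cached connection
```

After these two calls, `filesystem` has been started once and `github` not at all, which is the behavior the table describes as loading "only when needed".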

Prefer to build from scratch? Add MCPs manually:

    # Add the most popular MCPs:

    # AI reasoning and memory
    ncp add sequential-thinking npx @modelcontextprotocol/server-sequential-thinking
    ncp add memory npx @modelcontextprotocol/server-memory

    # File and development tools
    ncp add filesystem npx @modelcontextprotocol/server-filesystem ~/Documents  # Path: directory to access
    ncp add github npx @modelcontextprotocol/server-github                      # No path needed

    # Search and productivity
    ncp add brave-search npx @modelcontextprotocol/server-brave-search          # No path needed

💡 Pro tip: Browse Smithery.ai (2,200+ MCPs) or mcp.so to discover tools for your specific needs.

    # Community favorites (download counts from Smithery.ai):
    ncp add sequential-thinking npx @modelcontextprotocol/server-sequential-thinking  # 5,550+ downloads
    ncp add memory npx @modelcontextprotocol/server-memory                            # 4,200+ downloads
    ncp add brave-search npx @modelcontextprotocol/server-brave-search                # 680+ downloads

    # Popular dev tools:
    ncp add filesystem npx @modelcontextprotocol/server-filesystem ~/code
    ncp add github npx @modelcontextprotocol/server-github
    ncp add shell npx @modelcontextprotocol/server-shell

    # Enterprise favorites:
    ncp add gmail npx @mcptools/gmail-mcp
    ncp add slack npx @modelcontextprotocol/server-slack
    ncp add google-drive npx @modelcontextprotocol/server-gdrive
    ncp add postgres npx @modelcontextprotocol/server-postgres
    ncp add puppeteer npx @hisma/server-puppeteer

NCP includes powerful internal MCPs that extend functionality beyond external tool orchestration:

Schedule any MCP tool to run automatically using cron or natural language schedules.

    # Schedule a daily backup check
    ncp run schedule:create --params '{
      "name": "Daily Backup",
      "schedule": "every day at 2am",
      "tool": "filesystem:list_directory",
      "parameters": {"path": "/backups"}
    }'
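For readers wondering how a phrase like "every day at 2am" relates to a cron schedule, here is a deliberately tiny sketch that handles only the "every day at H[:MM]am/pm" form. It is an assumption about the mapping, not NCP's parser, which may accept far more phrasings.

```python
import re


def daily_schedule_to_cron(text: str) -> str:
    """Convert 'every day at <hour>[:<minute>]am|pm' to a 5-field cron expression."""
    match = re.fullmatch(
        r"every day at (\d{1,2})(?::(\d{2}))?\s*(am|pm)", text.strip().lower()
    )
    if not match:
        raise ValueError(f"unsupported schedule: {text!r}")
    hour = int(match.group(1))
    minute = int(match.group(2) or 0)
    meridiem = match.group(3)
    if not (1 <= hour <= 12 and 0 <= minute <= 59):
        raise ValueError(f"invalid time in schedule: {text!r}")
    # 12am maps to hour 0 and 12pm to hour 12; other hours shift by 12 for pm.
    hour = hour % 12 + (12 if meridiem == "pm" else 0)
    return f"{minute} {hour} * * *"


cron = daily_schedule_to_cron("every day at 2am")
```

Here `cron` is `"0 2 * * *"`: minute 0, hour 2, every day of every month.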

Features:

Install and configure MCPs dynamically through natural language.

    # AI can discover and install MCPs for you
    ncp find "install mcp"
    # Shows: mcp:install, mcp:search, mcp:configure

Features:

Configuration: Internal MCPs are disabled by default. Enable in your profile settings:

    {
      "settings": {
        "enable_schedule_mcp": true,
        "enable_mcp_management": true
      }
    }

NCP automatically detects broken MCPs and routes around them:

    ncp list --depth 1    # See health status
    ncp config validate   # Check configuration health

🎯 Result: Your AI never gets stuck on broken tools.
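One common way to "route around" a broken backend is to count consecutive failures and skip anything over a threshold. The sketch below shows that pattern under stated assumptions: the `HealthTracker` class, the threshold of 3, and the tool name `github:create_issue` are illustrative, and NCP's actual health logic is not documented here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HealthTracker:
    """Marks an MCP unhealthy after `max_failures` consecutive errors."""

    max_failures: int = 3  # assumed threshold, not an NCP setting
    failures: Dict[str, int] = field(default_factory=dict)

    def record(self, mcp: str, ok: bool) -> None:
        # A success resets the counter; a failure increments it.
        self.failures[mcp] = 0 if ok else self.failures.get(mcp, 0) + 1

    def is_healthy(self, mcp: str) -> bool:
        return self.failures.get(mcp, 0) < self.max_failures

    def route(self, candidates: List[str]) -> List[str]:
        """Keep only tools ("mcp:tool") whose MCP is currently healthy."""
        return [t for t in candidates if self.is_healthy(t.split(":", 1)[0])]


tracker = HealthTracker()
for _ in range(3):
    tracker.record("github", ok=False)
tracker.record("filesystem", ok=True)

usable = tracker.route(["github:create_issue", "filesystem:read_file"])
```

Only `filesystem:read_file` remains in `usable`; a later successful call to the `github` MCP would reset its counter and bring its tools back.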

Organize MCPs by project or environment:

    # Development setup
    ncp add --profile dev filesystem npx @modelcontextprotocol/server-filesystem ~/dev

    # Production setup
    ncp add --profile prod database npx production-db-server

    # Use specific profile
    ncp --profile dev find "file tools"

New: Configure MCPs per project with automatic detection - perfect for teams and Cloud IDEs:

    # In any project directory, create local MCP configuration:
    mkdir .ncp
    ncp add filesystem npx @modelcontextprotocol/server-filesystem ./
    ncp add github npx @modelcontextprotocol/server-github

    # NCP automatically detects and uses project-local configuration
    ncp find "save file"  # Uses only project MCPs

How it works:

- Local `.ncp` directory exists → Uses project configuration
- No local `.ncp` directory → Falls back to global `~/.ncp`
- In both cases the profile file is `all.json`

Perfect for:

- Teams (ship the `.ncp` folder with your repo)
- Cloud IDEs

Example project structures:

    frontend-app/
      .ncp/profiles/all.json   # → playwright, lighthouse, browser-context
      src/
    api-backend/
      .ncp/profiles/all.json   # → postgres, redis, docker, kubernetes
      server/
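The fallback rule above can be expressed as a short lookup. This is a sketch of the rule as described, assuming only the current project directory is checked (whether NCP also searches parent directories is not stated); `resolve_ncp_dir` is a hypothetical name.

```python
import tempfile
from pathlib import Path


def resolve_ncp_dir(project_dir: Path, home_dir: Path) -> Path:
    """Prefer <project>/.ncp when it exists, otherwise fall back to ~/.ncp."""
    local = project_dir / ".ncp"
    return local if local.is_dir() else home_dir / ".ncp"


# Demo with throwaway directories standing in for a project and $HOME.
with tempfile.TemporaryDirectory() as tmp:
    home = Path(tmp) / "home"
    with_local = Path(tmp) / "frontend-app"
    without_local = Path(tmp) / "scratch"
    (with_local / ".ncp").mkdir(parents=True)
    without_local.mkdir()
    home.mkdir()

    uses_local = resolve_ncp_dir(with_local, home) == with_local / ".ncp"
    uses_global = resolve_ncp_dir(without_local, home) == home / ".ncp"
```

In real use you would pass `Path.cwd()` and `Path.home()`.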

NCP supports both stdio (local) and HTTP/SSE (remote) MCP servers:

Stdio Transport (Traditional):

    # Local MCP servers running as processes
    ncp add filesystem npx @modelcontextprotocol/server-filesystem ~/Documents

HTTP/SSE Transport (Remote):

    {
      "mcpServers": {
        "remote-mcp": {
          "url": "https://mcp.example.com/api",
          "auth": {
            "type": "bearer",
            "token": "your-token-here"
          }
        }
      }
    }
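A `bearer` auth entry conventionally becomes an `Authorization: Bearer <token>` HTTP header. The sketch below builds (but never sends) such a request from the config shown above; how NCP itself constructs its requests is an assumption, and a real MCP session involves more than this single header.

```python
import json
import urllib.request

config = json.loads("""
{ "mcpServers": { "remote-mcp": {
    "url": "https://mcp.example.com/api",
    "auth": { "type": "bearer", "token": "your-token-here" } } } }
""")


def build_request(server: dict) -> urllib.request.Request:
    """Create (but do not send) a request carrying the configured credentials."""
    headers = {"Accept": "text/event-stream"}  # media type used by SSE streams
    auth = server.get("auth", {})
    if auth.get("type") == "bearer":
        headers["Authorization"] = f"Bearer {auth['token']}"
    return urllib.request.Request(server["url"], headers=headers)


request = build_request(config["mcpServers"]["remote-mcp"])
```

Keep real tokens out of version control; the placeholder value here comes straight from the example config.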

🔋 Hibernation-Enabled Servers:

NCP automatically supports hibernation-enabled MCP servers (like Cloudflare Durable Objects or Metorial):

How it works:

Perfect for:

Note: Hibernation is a server-side feature. NCP's standard HTTP/SSE client automatically works with both traditional and hibernation-enabled servers without any special configuration.

    # From clipboard (any JSON config)
    ncp config import

    # From specific file
    ncp config import "~/my-mcp-config.json"

    # From Claude Desktop (auto-detected paths)
    ncp config import

    # Check what was imported
    ncp list

    # Validate health of imported MCPs
    ncp config validate

    # See detailed import logs
    DEBUG=ncp:* ncp config import

- `ncp list` (should show your MCPs)
- `ncp find "your query"`
- `ncp` command

    # Check MCP health (unhealthy MCPs slow everything down)
    ncp list --depth 1

    # Clear cache if needed
    rm -rf ~/.ncp/cache

    # Monitor with debug logs
    DEBUG=ncp:* ncp find "test"

Like Yin and Yang, everything relies on the balance of things.

Compute gives us precision and certainty. AI gives us creativity and probability.

We believe breakthrough products emerge when you combine these forces in the right ratio.

How NCP embodies this balance:

| What NCP Does | AI (Creativity) | Compute (Precision) | The Balance |
|---|---|---|---|
| Tool Discovery | Understands "read a file" semantically | Routes to exact tool deterministically | Natural request → Precise execution |
| Orchestration | Flexible to your intent | Reliable tool execution | Natural flow → Certain outcomes |
| Health Monitoring | Adapts to patterns | Monitors connections, auto-failover | Smart adaptation → Reliable uptime |

Neither pure AI (too unpredictable) nor pure compute (too rigid).

Your AI stays creative. NCP handles the precision.

Want the technical details? Token analysis, architecture diagrams, and performance benchmarks:

Learn about:

Help make NCP even better:

Elastic License 2.0 - Full License

TLDR: Free for all use including commercial. Cannot be offered as a hosted service to third parties.