
An MCP server that provides up-to-date documentation indexing and search for AI coding assistants.

AI coding assistants often struggle with outdated documentation and hallucinations. The Docs MCP Server solves this by providing a personal, always-current knowledge base for your AI. It indexes 3rd party documentation from various sources (websites, GitHub, npm, PyPI, local files) and offers powerful, version-aware search tools via the Model Context Protocol (MCP).

This enables your AI agent to access the latest official documentation, dramatically improving the quality and reliability of generated code and integration details. It's free, open-source, runs locally for privacy, and integrates seamlessly into your development workflow.

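LLM-assisted coding promises speed and efficiency, but often falls short because of outdated documentation and hallucinations.

Docs MCP Server solves these problems by indexing current documentation from official sources and exposing version-aware search to your AI agent.

What is semantic chunking?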

Semantic chunking splits documentation into meaningful sections based on structure—like headings, code blocks, and tables—rather than arbitrary text size. Docs MCP Server preserves logical boundaries, keeps code and tables intact, and removes navigation clutter from HTML docs. This ensures LLMs receive coherent, context-rich information for more accurate and relevant answers.
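To make the idea concrete, here is a minimal Python sketch of structure-based splitting. It is not the server's actual implementation: `Chunk`, `chunk_markdown`, and `FENCE` are hypothetical names, and the sketch only handles Markdown headings and fenced code blocks (no tables or HTML cleanup).

```python
import re
from dataclasses import dataclass

FENCE = "`" * 3  # Markdown code-fence marker


@dataclass
class Chunk:
    heading: str  # nearest heading above the chunk ("" if none)
    text: str     # chunk body, including any fenced code blocks


def chunk_markdown(markdown: str) -> list[Chunk]:
    """Split Markdown at headings, never inside a fenced code block."""
    chunks: list[Chunk] = []
    heading, lines, in_fence = "", [], False

    def flush() -> None:
        # Emit the section collected so far, skipping empty sections.
        body = "\n".join(lines).strip()
        if body:
            chunks.append(Chunk(heading, body))

    for line in markdown.splitlines():
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence  # toggle on every fence marker
        # Lines inside a fence are never treated as headings.
        match = None if in_fence else re.match(r"^#{1,6}\s+(.*)$", line)
        if match:
            flush()  # close the previous section
            heading, lines = match.group(1).strip(), []
        else:
            lines.append(line)
    flush()
    return chunks
```

Because the split points follow headings rather than a character budget, a code sample always stays in the same chunk as the prose that introduces it.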

Choose your deployment method:

Run a standalone server that includes both MCP endpoints and web interface in a single process. This is the easiest way to get started.

Install Docker.

Start the server:

    docker run --rm \
      -v docs-mcp-data:/data \
      -p 6280:6280 \
      ghcr.io/arabold/docs-mcp-server:latest \
      --protocol http --host 0.0.0.0 --port 6280

Optional: Add -e OPENAI_API_KEY="your-openai-api-key" to enable vector search for improved results.

Install Node.js 22.x or later.

Start the server:

npx @arabold/docs-mcp-server@latest

This will run the server on port 6280 by default.

Optional: Prefix with OPENAI_API_KEY="your-openai-api-key" to enable vector search for improved results.

Add this to your MCP settings (VS Code, Claude Desktop, etc.):

    {
      "mcpServers": {
        "docs-mcp-server": {
          "type": "sse",
          "url": "http://localhost:6280/sse",
          "disabled": false,
          "autoApprove": []
        }
      }
    }

Alternative connection types:

    // SSE (Server-Sent Events)
    "type": "sse", "url": "http://localhost:6280/sse"

    // HTTP (Streamable)
    "type": "http", "url": "http://localhost:6280/mcp"

Restart your AI assistant after updating the config.
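If you generate client settings from a script, the two connection types differ only in the `type` value and the URL path. The following Python sketch builds the same structure shown above; `client_config` and `ENDPOINT_PATHS` are hypothetical helper names, and the default host and port simply mirror the examples in this section.

```python
import json

# Endpoint paths as listed under "Alternative connection types".
ENDPOINT_PATHS = {"sse": "/sse", "http": "/mcp"}


def client_config(transport: str = "sse", host: str = "localhost", port: int = 6280) -> dict:
    """Build an MCP settings entry for a running standalone server."""
    if transport not in ENDPOINT_PATHS:
        raise ValueError(f"unsupported transport: {transport!r}")
    return {
        "mcpServers": {
            "docs-mcp-server": {
                "type": transport,
                "url": f"http://{host}:{port}{ENDPOINT_PATHS[transport]}",
                "disabled": False,
                "autoApprove": [],
            }
        }
    }


if __name__ == "__main__":
    # Print the Streamable HTTP variant as JSON, ready to paste into a settings file.
    print(json.dumps(client_config("http"), indent=2))
```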

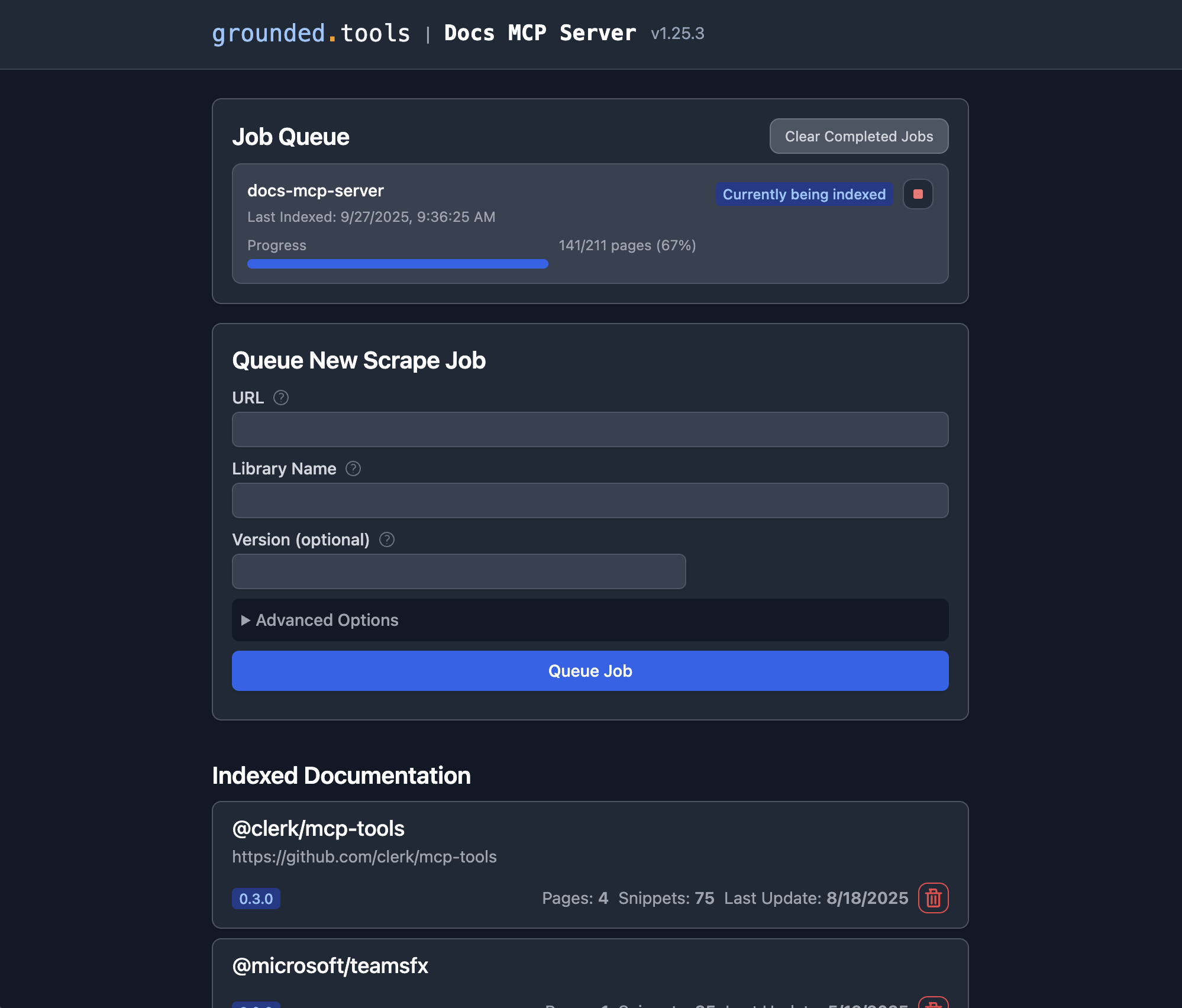

Open http://localhost:6280 in your browser to manage documentation and monitor jobs.

You can also use CLI commands to interact with the local database:

    # List indexed libraries
    OPENAI_API_KEY="your-key" npx @arabold/docs-mcp-server@latest list

    # Search documentation
    OPENAI_API_KEY="your-key" npx @arabold/docs-mcp-server@latest search react "useState hook"

    # Scrape new documentation (connects to running server's worker)
    npx @arabold/docs-mcp-server@latest scrape react https://react.dev/reference/react --server-url http://localhost:6280/api

Once a job completes, the docs are searchable via your AI assistant or the Web UI.
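For scripted indexing, the CLI calls above can be assembled as argument lists. This is a small Python sketch, not part of the project: `scrape_command` and `search_command` are hypothetical helpers, and they use only the subcommands and the `--server-url` option that appear in the examples above.

```python
import shlex
from typing import Optional

PACKAGE = "@arabold/docs-mcp-server@latest"


def scrape_command(library: str, url: str, server_url: Optional[str] = None) -> list[str]:
    """Argument list for indexing `url` under the name `library`."""
    cmd = ["npx", PACKAGE, "scrape", library, url]
    if server_url:
        # Send the job to a running server's worker instead of running it locally.
        cmd += ["--server-url", server_url]
    return cmd


def search_command(library: str, query: str) -> list[str]:
    """Argument list for searching an indexed library."""
    return ["npx", PACKAGE, "search", library, query]


if __name__ == "__main__":
    # shlex.join quotes arguments that contain spaces, so the output is safe to paste.
    print(shlex.join(search_command("react", "useState hook")))
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting problems with multi-word queries.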

Benefits:

To stop the server, press Ctrl+C.

Run the MCP server directly embedded in your AI assistant without a separate process or web interface. This method provides MCP integration only.

Add this to your MCP settings (VS Code, Claude Desktop, etc.):

    {
      "mcpServers": {
        "docs-mcp-server": {
          "command": "npx",
          "args": ["@arabold/docs-mcp-server@latest"],
          "disabled": false,
          "autoApprove": []
        }
      }
    }

Optional: To enable vector search for improved results, add an env section with your API key:

    {
      "mcpServers": {
        "docs-mcp-server": {
          "command": "npx",
          "args": ["@arabold/docs-mcp-server@latest"],
          "env": {
            "OPENAI_API_KEY": "sk-proj-..." // Your OpenAI API key
          },
          "disabled": false,
          "autoApprove": []
        }
      }
    }

Restart your application after updating the config.
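Most MCP settings files already contain other servers, so the entry is usually merged in rather than pasted over the whole file. The Python sketch below shows one way to do that without touching existing entries; `add_docs_server` is a hypothetical helper, and where your client stores its settings file is outside its scope.

```python
import copy
from typing import Optional


def add_docs_server(settings: dict, api_key: Optional[str] = None) -> dict:
    """Return a copy of `settings` with the embedded docs-mcp-server entry added."""
    entry = {
        "command": "npx",
        "args": ["@arabold/docs-mcp-server@latest"],
        "disabled": False,
        "autoApprove": [],
    }
    if api_key:
        # Optional: enables vector search, as described above.
        entry["env"] = {"OPENAI_API_KEY": api_key}
    merged = copy.deepcopy(settings)  # leave the caller's dict untouched
    merged.setdefault("mcpServers", {})["docs-mcp-server"] = entry
    return merged
```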

Option 1: Use MCP Tools

Your AI assistant can index new documentation using the built-in scrape_docs tool:

Please scrape the React documentation from https://react.dev/reference/react for library "react" version "18.x"

Option 2: Launch Web Interface

Start a temporary web interface that shares the same database:

OPENAI_API_KEY="your-key" npx @arabold/docs-mcp-server@latest web --port 6281

Then open http://localhost:6281 to manage documentation. Stop the web interface when done (Ctrl+C).

Option 3: CLI Commands

Use CLI commands directly (avoid running scrape jobs concurrently with embedded server):

    # List libraries
    OPENAI_API_KEY="your-key" npx @arabold/docs-mcp-server@latest list

    # Search documentation
    OPENAI_API_KEY="your-key" npx @arabold/docs-mcp-server@latest search react "useState hook"

Benefits:

Limitations:

You can index documentation from your local filesystem by using a file:// URL as the source. This works in both the Web UI and CLI.

Examples:

- https://react.dev/reference/react
- file:///Users/me/docs/index.html
- file:///Users/me/docs/my-library

Requirements:

- Only files with a MIME type of text/* are processed. This includes HTML, Markdown, plain text, and source code files such as .js, .ts, .tsx, .css, etc. Binary files, PDFs, images, and other non-text formats are ignored.
- Use the file:// prefix for local files/folders.

When running in Docker, mount the local directory into the container and use the container path in the file:// URL:

    docker run --rm \
      -e OPENAI_API_KEY="your-key" \
      -v /absolute/path/to/docs:/docs:ro \
      -v docs-mcp-data:/data \
      -p 6280:6280 \
      ghcr.io/arabold/docs-mcp-server:latest \
      scrape mylib file:///docs/my-library

In this example the source is file:///docs/my-library (matching the container path).

See the tooltips in the Web UI and CLI help for more details.
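The Docker example mounts /absolute/path/to/docs at /docs, so the URL passed to the scraper has to use the container path, not the host path. A short Python sketch of that translation follows; `container_file_url` is a hypothetical helper, and it assumes POSIX-style paths on both sides of the mount.

```python
from pathlib import PurePosixPath


def container_file_url(host_path: str, host_mount: str, container_mount: str) -> str:
    """Map a host path under `host_mount` to a file:// URL inside the container."""
    # relative_to raises ValueError if host_path is not inside the mounted directory.
    relative = PurePosixPath(host_path).relative_to(PurePosixPath(host_mount))
    return (PurePosixPath(container_mount) / relative).as_uri()


if __name__ == "__main__":
    print(container_file_url(
        "/absolute/path/to/docs/my-library",
        "/absolute/path/to/docs",
        "/docs",
    ))
```

Paths outside the mounted directory raise a `ValueError`, which catches the common mistake of pointing the scraper at a folder the container cannot see.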

For production deployments or when you need to scale processing, use Docker Compose to run separate services. The system selects either a local in-process worker or a remote worker client based on the configuration, ensuring consistent behavior across modes.

Start the services:

    # Clone the repository (to get docker-compose.yml)
    git clone https://github.com/arabold/docs-mcp-server.git
    cd docs-mcp-server

    # Set your environment variables
    export OPENAI_API_KEY="your-key-here"

    # Start all services
    docker compose up -d

Service architecture:

- MCP server: provides the /sse endpoint for AI tools

Configure your MCP client:

    {
      "mcpServers": {
        "docs-mcp-server": {
          "type": "sse",
          "url": "http://localhost:6280/sse",
          "disabled": false,
          "autoApprove": []
        }
      }
    }

Alternative connection types:

    // SSE (Server-Sent Events)
    "type": "sse", "url": "http://localhost:6280/sse"

    // HTTP (Streamable)
    "type": "http", "url": "http://localhost:6280/mcp"

Access interfaces:

- Web interface: http://localhost:6281
- MCP endpoint (HTTP): http://localhost:6280/mcp
- MCP endpoint (SSE): http://localhost:6280/sse

This architecture allows independent scaling of processing (workers) and user interfaces.

The Docs MCP Server can run without any configuration and will use full-text search only. To enable vector search for improved results, configure an embedding provider via environment variables.

Many CLI arguments can be overridden using environment variables. This is useful for Docker deployments, CI/CD pipelines, or setting default values.

| Environment Variable | CLI Argument | Description | Used by Commands |
|---|---|---|---|
| DOCS_MCP_STORE_PATH | --store-path | Custom path for data storage directory | all |
| DOCS_MCP_TELEMETRY | --no-telemetry | Disable telemetry (false to disable) | all |
| DOCS_MCP_PROTOCOL | --protocol | MCP server protocol (auto, stdio, http) | default, mcp |
| DOCS_MCP_PORT | --port | Server port | default, mcp, web, worker |
| DOCS_MCP_WEB_PORT | --port (web command) | Web interface port (web command only) | web |
| PORT | --port | Server port (fallback if DOCS_MCP_PORT not set) | default, mcp, web, worker |
| DOCS_MCP_HOST | --host | Server host/bind address | default, mcp, web, worker |
| HOST | --host | Server host (fallback if DOCS_MCP_HOST not set) | default, mcp, web, worker |
| DOCS_MCP_EMBEDDING_MODEL | --embedding-model | Embedding model configuration | default, mcp, web, worker |
| DOCS_MCP_AUTH_ENABLED | --auth-enabled | Enable OAuth2/OIDC authentication | default, mcp |
| DOCS_MCP_AUTH_ISSUER_URL | --auth-issuer-url | OAuth2 provider issuer/discovery URL | default, mcp |
| DOCS_MCP_AUTH_AUDIENCE | --auth-audience | JWT audience claim (resource identifier) | default, mcp |

Usage Examples:

    # Set via environment variables
    export DOCS_MCP_PORT=8080
    export DOCS_MCP_HOST=0.0.0.0
    export DOCS_MCP_EMBEDDING_MODEL=text-embedding-3-small
    npx @arabold/docs-mcp-server@latest

    # Override with CLI arguments (takes precedence)
    DOCS_MCP_PORT=8080 npx @arabold/docs-mcp-server@latest --port 9090
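The precedence described by the table and the example above (CLI argument, then DOCS_MCP_PORT, then PORT, then the default port 6280) can be sketched in Python as follows. This illustrates the lookup order only and is not the server's own code; `resolve_port` is a hypothetical name, and treating an empty variable as unset is an assumption.

```python
from typing import Mapping, Optional

DEFAULT_PORT = 6280  # default port stated earlier in this document


def resolve_port(cli_port: Optional[int], env: Mapping[str, str]) -> int:
    """Pick the effective port: CLI argument > DOCS_MCP_PORT > PORT > default."""
    if cli_port is not None:
        return cli_port
    for name in ("DOCS_MCP_PORT", "PORT"):
        value = env.get(name)
        if value:  # assumption: an empty string counts as "not set"
            return int(value)
    return DEFAULT_PORT
```

With `DOCS_MCP_PORT=8080` in the environment and `--port 9090` on the command line, this lookup yields 9090, matching the override example above.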

The Docs MCP Server is configured via environment variables. Set these in your shell, Docker, or MCP client config.

| Variable | Description |
|---|---|
| DOCS_MCP_EMBEDDING_MODEL | Embedding model to use (see below for options). |
| OPENAI_API_KEY | OpenAI API key for embeddings. |
| OPENAI_API_BASE | Custom OpenAI-compatible API endpoint (e.g., Ollama). |
| GOOGLE_API_KEY | Google API key for Gemini embeddings. |
| GOOGLE_APPLICATION_CREDENTIALS | Path to Google service account JSON for Vertex AI. |
| AWS_ACCESS_KEY_ID | AWS key for Bedrock embeddings. |
| AWS_SECRET_ACCESS_KEY | AWS secret for Bedrock embeddings. |
| AWS_REGION | AWS region for Bedrock. |
| AZURE_OPENAI_API_KEY | Azure OpenAI API key. |
| AZURE_OPENAI_API_INSTANCE_NAME | Azure OpenAI instance name. |
| AZURE_OPENAI_API_DEPLOYMENT_NAME | Azure OpenAI deployment name. |
| AZURE_OPENAI_API_VERSION | Azure OpenAI API version. |

See the configuration examples below for usage.

Set DOCS_MCP_EMBEDDING_MODEL to one of:

- text-embedding-3-small (default, OpenAI)
- openai:snowflake-arctic-embed2 (OpenAI-compatible, Ollama)
- vertex:text-embedding-004 (Google Vertex AI)
- gemini:embedding-001 (Google Gemini)
- aws:amazon.titan-embed-text-v1 (AWS Bedrock)
- microsoft:text-embedding-ada-002 (Azure OpenAI)

Here are complete configuration examples for different embedding providers:

OpenAI (Default):

    OPENAI_API_KEY="sk-proj-your-openai-api-key" \
    DOCS_MCP_EMBEDDING_MODEL="text-embedding-3-small" \
    npx @arabold/docs-mcp-server@latest

Ollama (Local):

    OPENAI_API_KEY="ollama" \
    OPENAI_API_BASE="http://localhost:11434/v1" \
    DOCS_MCP_EMBEDDING_MODEL="nomic-embed-text" \
    npx @arabold/docs-mcp-server@latest

LM Studio (Local):

    OPENAI_API_KEY="lmstudio" \
    OPENAI_API_BASE="http://localhost:1234/v1" \
    DOCS_MCP_EMBEDDING_MODEL="text-embedding-qwen3-embedding-4b" \
    npx @arabold/docs-mcp-server@latest

Google Gemini:

    GOOGLE_API_KEY="your-google-api-key" \
    DOCS_MCP_EMBEDDING_MODEL="gemini:embedding-001" \
    npx @arabold/docs-mcp-server@latest

Google Vertex AI:

    GOOGLE_APPLICATION_CREDENTIALS="/path/to/your/gcp-service-account.json" \
    DOCS_MCP_EMBEDDING_MODEL="vertex:text-embedding-004" \
    npx @arabold/docs-mcp-server@latest

AWS Bedrock:

    AWS_ACCESS_KEY_ID="your-aws-access-key-id" \
    AWS_SECRET_ACCESS_KEY="your-aws-secret-access-key" \
    AWS_REGION="us-east-1" \
    DOCS_MCP_EMBEDDING_MODEL="aws:amazon.titan-embed-text-v1" \
    npx @arabold/docs-mcp-server@latest

Azure OpenAI:

    AZURE_OPENAI_API_KEY="your-azure-openai-api-key" \
    AZURE_OPENAI_API_INSTANCE_NAME="your-instance-name" \
    AZURE_OPENAI_API_DEPLOYMENT_NAME="your-deployment-name" \
    AZURE_OPENAI_API_VERSION="2024-02-01" \
    DOCS_MCP_EMBEDDING_MODEL="microsoft:text-embedding-ada-002" \
    npx @arabold/docs-mcp-server@latest
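The model values above follow a `provider:model` pattern, with a bare model name falling back to OpenAI. The Python sketch below checks a configuration before starting the server. It is an illustration, not the server's parser: `parse_model_spec`, `missing_env`, and `REQUIRED_ENV` are hypothetical names, and the per-provider variable lists simply mirror the examples in this section (the real server may accept other credential sources).

```python
from typing import Mapping

# Variables used by each provider in the examples above (an assumption, not an official list).
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "gemini": ["GOOGLE_API_KEY"],
    "vertex": ["GOOGLE_APPLICATION_CREDENTIALS"],
    "aws": ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION"],
    "microsoft": [
        "AZURE_OPENAI_API_KEY",
        "AZURE_OPENAI_API_INSTANCE_NAME",
        "AZURE_OPENAI_API_DEPLOYMENT_NAME",
        "AZURE_OPENAI_API_VERSION",
    ],
}


def parse_model_spec(spec: str) -> tuple[str, str]:
    """Split 'provider:model'; a bare or unrecognized prefix is treated as an OpenAI model."""
    provider, sep, model = spec.partition(":")
    if sep and provider in REQUIRED_ENV:
        return provider, model
    return "openai", spec


def missing_env(spec: str, env: Mapping[str, str]) -> list[str]:
    """List the variables from REQUIRED_ENV that are unset or empty for this model."""
    provider, _ = parse_model_spec(spec)
    return [name for name in REQUIRED_ENV[provider] if not env.get(name)]
```

Calling `missing_env(os.environ["DOCS_MCP_EMBEDDING_MODEL"], os.environ)` in a startup script gives an early, readable error instead of a failed embedding request later.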

For more architectural details, see ARCHITECTURE.md.

For enterprise authentication and security features, see the Authentication Guide.

The Docs MCP Server includes privacy-first telemetry to help improve the product. We collect anonymous usage data to understand how the tool is used and identify areas for improvement.

You can disable telemetry collection entirely:

Option 1: CLI Flag

npx @arabold/docs-mcp-server@latest --no-telemetry

Option 2: Environment Variable

DOCS_MCP_TELEMETRY=false npx @arabold/docs-mcp-server@latest

Option 3: Docker

    docker run \
      -e DOCS_MCP_TELEMETRY=false \
      -v docs-mcp-data:/data \
      -p 6280:6280 \
      ghcr.io/arabold/docs-mcp-server:latest

For more details about our telemetry practices, see the Telemetry Guide.

To develop or contribute to the Docs MCP Server:

For questions or suggestions, open an issue.

For details on the project's architecture and design principles, please see ARCHITECTURE.md.

Notably, the vast majority of this project's code was generated by the AI assistant Cline, leveraging the capabilities of this very MCP server.

This project is licensed under the MIT License. See LICENSE for details.