Vision

Computer vision MCP server providing zero-shot object detection tools for language models.

A Model Context Protocol (MCP) server exposing HuggingFace computer vision models such as zero-shot object detection as tools, enhancing the vision capabilities of large language or vision-language models.

This repo is in active development. See below for details of currently available tools.

Clone the repo:

git clone git@github.com:groundlight/mcp-vision.git

Build a local docker image:

cd mcp-vision

make build-docker

Add this to your claude_desktop_config.json:

If your local environment has access to an NVIDIA GPU:

"mcpServers": { "mcp-vision": { "command": "docker", "args": ["run", "-i", "--rm", "--runtime=nvidia", "--gpus", "all", "mcp-vision"], "env": {} } }

Or, CPU only:

"mcpServers": { "mcp-vision": { "command": "docker", "args": ["run", "-i", "--rm", "mcp-vision"], "env": {} } }

When running on CPU, the default large-size object detection model may take a long time to load and run inference. Consider using a smaller model as DEFAULT_OBJDET_MODEL (you can also tell Claude directly to use a specific model).

(Beta) It is possible to run the public docker image directly without building locally. However, the download time may interfere with Claude's loading of the server.

"mcpServers": { "mcp-vision": { "command": "docker", "args": ["run", "-i", "--rm", "--runtime=nvidia", "--gpus", "all", "groundlight/mcp-vision:latest"], "env": {} } }
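The three configuration variants above differ only in the GPU flags and the image name. As a minimal sketch of that pattern, the Python below builds the "mcp-vision" entry and merges it into an existing config dictionary. The helper names `build_server_entry` and `merge_into_config`, and the `other-server` placeholder entry, are hypothetical and exist only for illustration; they are not part of mcp-vision or Claude Desktop.

```python
import json


def build_server_entry(use_gpu: bool, image: str = "mcp-vision") -> dict:
    """Return the "mcp-vision" entry for the "mcpServers" section."""
    args = ["run", "-i", "--rm"]
    if use_gpu:
        # Same GPU flags as in the GPU configuration shown above.
        args += ["--runtime=nvidia", "--gpus", "all"]
    args.append(image)
    return {"command": "docker", "args": args, "env": {}}


def merge_into_config(config: dict, entry: dict, name: str = "mcp-vision") -> dict:
    """Return a copy of config with the entry added under "mcpServers"."""
    merged = dict(config)
    servers = dict(merged.get("mcpServers", {}))
    servers[name] = entry
    merged["mcpServers"] = servers
    return merged


# Hypothetical existing config with one unrelated placeholder server.
existing = {"mcpServers": {"other-server": {"command": "example", "args": []}}}
updated = merge_into_config(existing, build_server_entry(use_gpu=False))
print(json.dumps(updated, indent=2))
```

Passing `use_gpu=True` or `image="groundlight/mcp-vision:latest"` reproduces the GPU and public-image variants. Writing the result back to claude_desktop_config.json is left out because the file location depends on the platform.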

The following tools are currently available through the mcp-vision server:

Object detection tool parameters:

image_path (string): URL or file path

candidate_labels (list of strings): list of possible objects to detect

hf_model (optional string): defaults to "google/owlvit-large-patch14", which could be slow on a non-GPU machine

Zoom-and-crop tool parameters:

image_path (string): URL or file path

label (string): object label to find, zoom to, and crop to

hf_model (optional string): defaults to "google/owlvit-large-patch14", which could be slow on a non-GPU machine

Run Claude Desktop with Claude Sonnet 3.7 and mcp-vision configured as an MCP server in claude_desktop_config.json.
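For orientation, the tool parameters described above map closely onto the Hugging Face `transformers` zero-shot object detection pipeline. The sketch below is an assumption about how such tools could work, not the mcp-vision implementation. The helper names `detect`, `best_box`, and `crop_region` are hypothetical, and the sample detections are made-up values in the pipeline's output shape.

```python
from typing import Optional


def detect(image_path: str, candidate_labels: list,
           hf_model: str = "google/owlvit-large-patch14") -> list:
    """Run zero-shot object detection with a Hugging Face pipeline."""
    # Imported lazily so the helpers below work without transformers installed.
    from transformers import pipeline

    detector = pipeline("zero-shot-object-detection", model=hf_model)
    # Each result is a dict with "score", "label", and a "box" dict
    # holding xmin, ymin, xmax, ymax in pixels.
    return detector(image_path, candidate_labels=candidate_labels)


def best_box(detections: list, label: str) -> Optional[dict]:
    """Return the highest-scoring box for the label, or None if absent."""
    matches = [d for d in detections if d["label"] == label]
    if not matches:
        return None
    return max(matches, key=lambda d: d["score"])["box"]


def crop_region(box: dict) -> tuple:
    """Convert a box into the (left, upper, right, lower) tuple PIL's Image.crop expects."""
    return (box["xmin"], box["ymin"], box["xmax"], box["ymax"])


# Made-up detections, used instead of calling detect() so the example runs offline.
sample = [
    {"score": 0.31, "label": "sign", "box": {"xmin": 10, "ymin": 20, "xmax": 110, "ymax": 80}},
    {"score": 0.74, "label": "sign", "box": {"xmin": 200, "ymin": 40, "xmax": 320, "ymax": 120}},
    {"score": 0.55, "label": "door", "box": {"xmin": 0, "ymin": 0, "xmax": 50, "ymax": 90}},
]
print(crop_region(best_box(sample, "sign")))
```

With the sample data, the higher-scoring "sign" box wins, so the printed region is `(200, 40, 320, 120)`. Calling `detect` requires `transformers` and a model download, which is why it is kept separate from the pure helpers.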

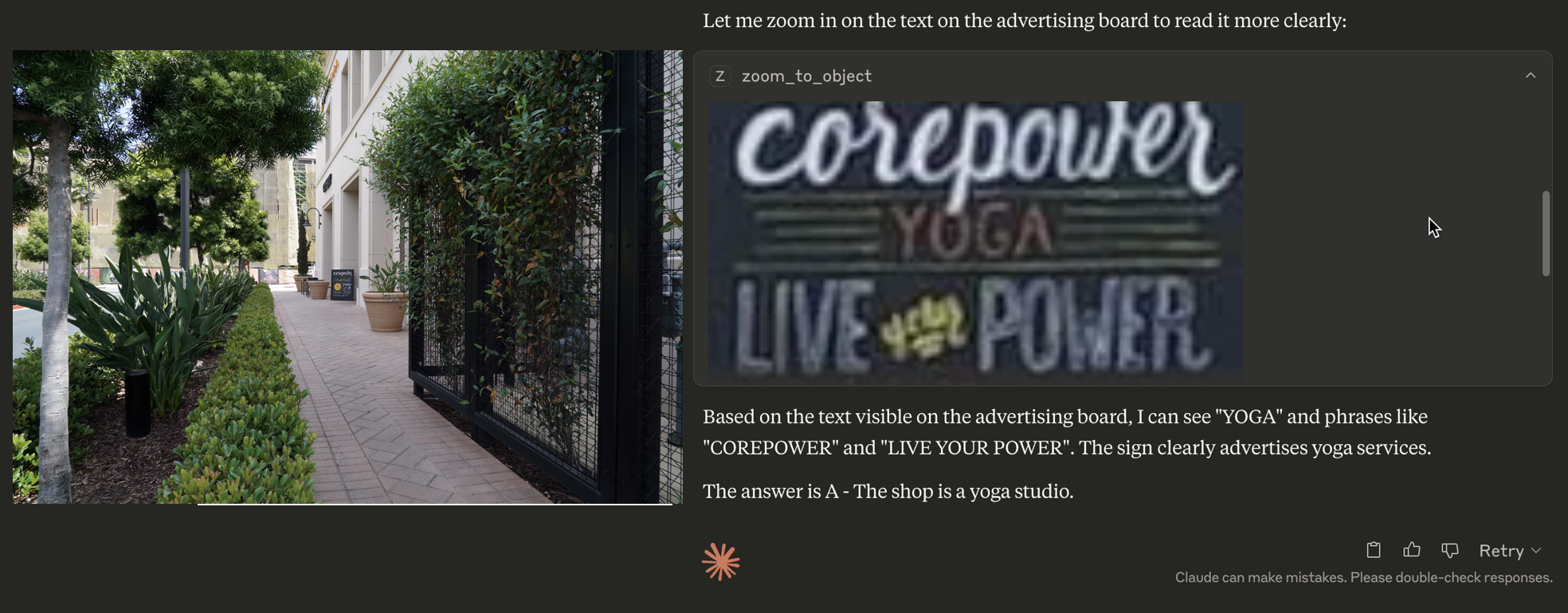

The prompt used in the example video and blog post was:

From the information on that advertising board, what is the type of this shop?

Options:

The shop is a yoga studio.

The shop is a cafe.

The shop is a seven-eleven.

The shop is a milk tea shop.

The image is the first image in the V*Bench/GPT4V-hard dataset and can be found here: https://huggingface.co/datasets/craigwu/vstar_bench/blob/main/GPT4V-hard/0.JPG (use the download link).
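The link above points at the file's web page rather than the raw image. Hugging Face serves raw files under `/resolve/` in place of `/blob/`, so a direct download URL can be derived as in the small sketch below. The helper name `to_download_url` is hypothetical.

```python
def to_download_url(blob_url: str) -> str:
    """Rewrite a Hugging Face /blob/ page URL into a /resolve/ file URL."""
    if "/blob/" not in blob_url:
        raise ValueError("expected a Hugging Face URL containing '/blob/'")
    # Replace only the first occurrence so later path segments are left alone.
    return blob_url.replace("/blob/", "/resolve/", 1)


page_url = "https://huggingface.co/datasets/craigwu/vstar_bench/blob/main/GPT4V-hard/0.JPG"
image_url = to_download_url(page_url)
print(image_url)
```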

Note:

Run locally using the uv package manager:

uv sync

uv run python mcp_vision

Build the Docker image locally:

make build-docker

Run the Docker image locally:

make run-docker-cpu

or

make run-docker-gpu

[Groundlight Internal] Push the Docker image to Docker Hub (requires DockerHub credentials):

make push-docker

If Claude Desktop is failing to connect to mcp-vision:

On accounts that have web search enabled, Claude appears to prefer web search over local MCP tools. Disable web search for best results.