Readwise Vector

Self-hosted semantic search engine for Readwise library highlights with MCP server support.

Turn your Readwise library into a blazing-fast semantic search engine – complete with nightly syncs, vector search API, Prometheus metrics, and a streaming MCP server for LLM clients.

```bash
# ❶ Clone & install
git clone https://github.com/leonardsellem/readwise-vector-db.git
cd readwise-vector-db
poetry install --sync

# ❷ Boot DB & run the API (localhost:8000)
docker compose up -d db
poetry run uvicorn readwise_vector_db.api:app --reload

# ❸ Verify
curl http://127.0.0.1:8000/health   # → {"status":"ok"}
open http://127.0.0.1:8000/docs     # interactive swagger UI
```
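The `curl` check above is a one-off. When you script the quick start (for example in CI), it can help to block until the API is actually serving. A minimal standard-library sketch, assuming `/health` keeps returning `{"status":"ok"}`; `wait_for_health` is a hypothetical helper, not part of `readwise_vector_db`:

```python
import json
import time
import urllib.request


def wait_for_health(
    url: str,
    timeout: float = 30.0,
    interval: float = 0.5,
    request_timeout: float = 2.0,
) -> bool:
    """Poll `url` until it answers {"status": "ok"} or `timeout` seconds pass.

    Hypothetical helper; assumes the /health body shown in the quick start.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=request_timeout) as resp:
                body = json.load(resp)
                if isinstance(body, dict) and body.get("status") == "ok":
                    return True
        except (OSError, ValueError):
            # OSError covers URLError, refused connections and timeouts;
            # ValueError covers a body that is not valid JSON. Retry either way.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_health("http://127.0.0.1:8000/health")` returns `True` once uvicorn is serving, or `False` after 30 seconds.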

Tip: Codespaces user? Click "Run → Open in Browser" after step ❷.

Alternatively, skip the local Docker setup and use a managed PostgreSQL instance with pgvector support, such as Supabase:

```bash
# ❶ Create Supabase project at https://supabase.com/dashboard

# ❷ Enable pgvector extension in SQL Editor:
#    CREATE EXTENSION IF NOT EXISTS vector;

# ❸ Set up environment
export DB_BACKEND=supabase
export SUPABASE_DB_URL="postgresql://postgres:[password]@db.[project].supabase.co:6543/postgres?options=project%3D[project]"
export READWISE_TOKEN=xxxx
export OPENAI_API_KEY=sk-...

# ❹ Run migrations and start the API
poetry run alembic upgrade head
poetry run uvicorn readwise_vector_db.api:app --reload

# ❺ Initial sync
poetry run rwv sync --backfill
```
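If your database password contains characters such as `@`, `:` or `/`, pasting it raw into the `SUPABASE_DB_URL` template above breaks the URL. A small sketch that fills the same template with percent-encoded values; `build_supabase_url` is a hypothetical helper, and it only reproduces the host, port and `options` layout shown above:

```python
from urllib.parse import quote


def build_supabase_url(password: str, project_ref: str) -> str:
    """Fill the SUPABASE_DB_URL template with URL-safe values.

    Hypothetical helper: quote(..., safe="") percent-encodes every reserved
    character, so '@', ':' and '/' in the password cannot split the URL.
    """
    pw = quote(password, safe="")
    ref = quote(project_ref, safe="")
    return (
        f"postgresql://postgres:{pw}@db.{ref}.supabase.co:6543/postgres"
        f"?options=project%3D{ref}"
    )
```

Print the result once and export it as `SUPABASE_DB_URL`.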

⚠️ Fail-fast behavior: The application raises `ValueError` immediately on startup if `SUPABASE_DB_URL` is missing when `DB_BACKEND=supabase`.

Environment Variables Required:

- `DB_BACKEND=supabase` – Switches from local Docker to Supabase
- `SUPABASE_DB_URL` – Full PostgreSQL connection string from the Supabase dashboard
- `READWISE_TOKEN`, `OPENAI_API_KEY`

Benefits:

Deploy the FastAPI app to Vercel as a serverless function with a Supabase backend:

```bash
# ❶ Set up Vercel project
npm install -g vercel
vercel login
vercel link   # or vercel --confirm for new project

# ❷ Configure environment variables in Vercel dashboard or CLI:
vercel env add SUPABASE_DB_URL
vercel env add READWISE_TOKEN
vercel env add OPENAI_API_KEY

# ❸ Deploy
vercel --prod
```

Automatic Configuration:

- `DEPLOY_TARGET=vercel` – Automatically set by the Vercel environment
- `DB_BACKEND=supabase` – Pre-configured in `vercel.json`
- `vercel_build.sh` script

Resource Limits:

SSE Streaming Support:

- `/mcp/stream` endpoint works seamlessly

GitHub Integration:

- Tags matching `v*.*.*` automatically deploy to production

💡 Pro tip: Use `vercel --prebuilt` for faster subsequent deployments.

Traditional TCP MCP servers don't work in serverless environments because they require persistent connections. The HTTP-based MCP Server with Server-Sent Events (SSE) solves this by providing:

| Feature | TCP MCP Server | HTTP SSE MCP Server |
|---|---|---|
| Serverless Support | ❌ Requires persistent connections | ✅ Works on Vercel, Lambda, etc. |
| Firewall/Proxy | ⚠️ May require custom ports | ✅ Standard HTTP/HTTPS (80/443) |
| Browser Support | ❌ No native support | ✅ EventSource API built-in |
| Auto-scaling | ⚠️ Limited by connection pooling | ✅ Scales horizontally via standard HTTP infrastructure |
| Cold Starts | ❌ Connection drops during restarts | ✅ Stateless, reconnects automatically |
| HTTP/2 Benefits | ❌ Not applicable | ✅ Multiplexing, header compression |

Use the SSE endpoint for production deployments on cloud platforms. The TCP server remains available for local development and dedicated server deployments.

📚 Comprehensive deployment guide: See docs/deployment-sse.md for detailed platform-specific instructions, troubleshooting, and performance tuning.

• Python 3.12 | Poetry ≥ 1.8 | Docker + Compose

Create `.env` (see `.env.example`); minimal version:

```dotenv
# get from readwise.io/api_token
READWISE_TOKEN=xxxx
OPENAI_API_KEY=sk-...
DATABASE_URL=postgresql+asyncpg://rw_user:rw_pass@localhost:5432/readwise
```

All variables are documented in docs/env.md.

```bash
docker compose up -d db   # Postgres 16 + pgvector
poetry run alembic upgrade head
```

```bash
# first-time full sync
poetry run rwv sync --backfill

# daily incremental (fetch since yesterday)
poetry run rwv sync --since $(date -Idate -d 'yesterday')
```

```bash
curl -X POST http://127.0.0.1:8000/search \
  -H 'Content-Type: application/json' \
  -d '{
        "q": "Large Language Models",
        "k": 10,
        "filters": {
          "source": "kindle",
          "tags": ["ai", "research"],
          "highlighted_at": ["2024-01-01", "2024-12-31"]
        }
      }'
```

```bash
# Real-time streaming via Server-Sent Events (serverless-friendly)
curl -N -H "Accept: text/event-stream" \
  "http://127.0.0.1:8000/mcp/stream?q=neural+networks&k=10"
```
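A minimal Python consumer for the stream above. It applies basic SSE framing: `event:` and `data:` fields accumulate until a blank line dispatches the event, lines starting with `:` are comments, and `id:`/`retry:` are ignored for brevity. Which event names and payloads `/mcp/stream` actually emits is not documented here, so events are yielded as raw `(event, data)` strings; the function names are hypothetical:

```python
import urllib.parse
import urllib.request
from collections.abc import Iterable, Iterator


def stream_url(q: str, k: int = 10, base_url: str = "http://127.0.0.1:8000") -> str:
    # urlencode turns spaces into '+', matching q=neural+networks above
    return f"{base_url}/mcp/stream?" + urllib.parse.urlencode({"q": q, "k": k})


def iter_sse_events(lines: Iterable[bytes]) -> Iterator[tuple[str, str]]:
    """Yield (event, data) pairs from raw SSE lines."""
    event, data = "message", []
    for raw in lines:
        line = raw.decode("utf-8").rstrip("\r\n")
        if not line:  # blank line: dispatch the buffered event, if any
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space is not part of the value
        if field == "event":
            event = value
        elif field == "data":
            data.append(value)


def stream_search(q: str, k: int = 10) -> Iterator[tuple[str, str]]:
    req = urllib.request.Request(stream_url(q, k), headers={"Accept": "text/event-stream"})
    with urllib.request.urlopen(req) as resp:
        # The response iterates line by line, so events are parsed as they arrive
        yield from iter_sse_events(resp)
```

Usage: `for event, data in stream_search("neural networks"): print(event, data)`.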

```bash
poetry run python -m readwise_vector_db.mcp --host 0.0.0.0 --port 8375 &

# then from another shell
printf '{"jsonrpc":"2.0","id":1,"method":"search","params":{"q":"neural networks"}}\n' | \
  nc 127.0.0.1 8375
```
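The `printf | nc` call can also be made from Python with a plain socket. This sketch sends one newline-terminated JSON-RPC 2.0 object, exactly like the `printf` line. It assumes the server answers with a single newline-terminated JSON reply, which the example above does not confirm; `encode_request` and `call_mcp` are hypothetical names:

```python
import itertools
import json
import socket

_ids = itertools.count(1)  # JSON-RPC request ids for this process


def encode_request(method: str, params: dict, req_id: int) -> bytes:
    # One compact JSON object per line, newline-terminated like the printf example
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method, "params": params}
    return (json.dumps(msg, separators=(",", ":")) + "\n").encode("utf-8")


def call_mcp(
    method: str,
    params: dict,
    host: str = "127.0.0.1",
    port: int = 8375,
    timeout: float = 10.0,
) -> dict:
    """Send one request and read one line back (assumed reply framing)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_request(method, params, next(_ids)))
        with sock.makefile("rb") as reply:
            # If the server closes without replying, readline() returns b""
            # and json.loads raises JSONDecodeError.
            return json.loads(reply.readline())
```

With the server from the previous step running, `call_mcp("search", {"q": "neural networks"})` mirrors the shell example.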

💡 New: Check out the SSE Usage Guide for JavaScript, Python, and browser examples!

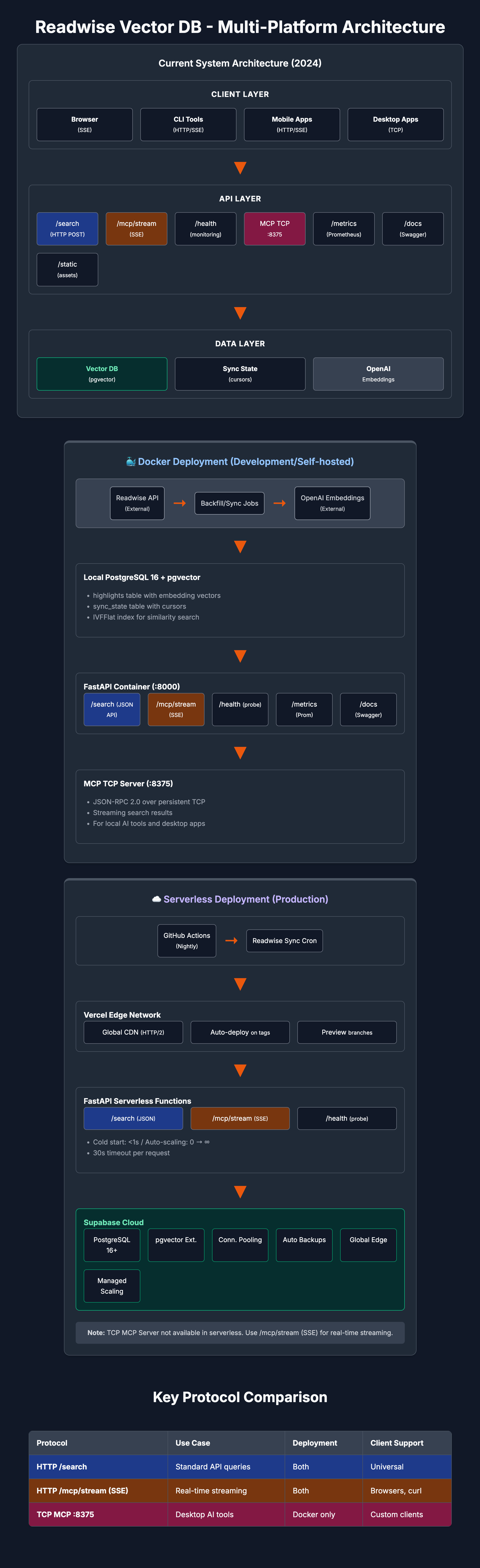

The system supports multiple deployment patterns to fit different infrastructure needs:

```mermaid
flowchart TB
  subgraph "🐳 Docker Deployment"
    subgraph Ingestion
      A[Readwise API] --> B[Backfill Job]
      C[Nightly Cron] --> D[Incremental Job]
    end
    B --> E[OpenAI Embeddings]
    D --> E
    E --> F[Local PostgreSQL + pgvector]
    F --> G[FastAPI Container]
    G --> H[MCP Server :8375]
    G --> I[Prometheus /metrics]
  end
```

```mermaid
flowchart TB
  subgraph Serverless_Deployment
    subgraph Vercel_Edge
      J[FastAPI Serverless]
      K[/health endpoint/]
      L[/search endpoint/]
      M[/docs Swagger UI/]
      J --> K
      J --> L
      J --> M
    end
    subgraph Supabase_Cloud
      N[Managed PostgreSQL]
      O[pgvector Extension]
      P[Automated Backups]
      N --> O
      P --> N
    end
    J -.-> N
    Q[GitHub Actions]
    R[Auto Deploy on Tags]
    Q --> R
    R --> J
  end
```

Key Differences:

Documentation:

```bash
poetry install --with dev
poetry run pre-commit install   # black, isort, ruff, mypy, markdownlint
```

```bash
poetry run coverage run -m pytest && poetry run coverage report
```

- Performance check (`make perf`) – fails if `/search` P95 > 500 ms.
- Commit messages use the `feat:`, `fix:` … prefixes.
- Code style: `.editorconfig` and enforced linters.

See CONTRIBUTING.md for full guidelines.
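The `make perf` gate boils down to a percentile check on `/search` latencies. A sketch of that check using the nearest-rank P95; whether `make perf` uses nearest-rank or an interpolating percentile is not stated, so treat the method and the helper names `p95` and `within_budget` as assumptions:

```python
from collections.abc import Sequence


def p95(latencies_ms: Sequence[float]) -> float:
    """95th percentile of latency samples, nearest-rank method."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def within_budget(latencies_ms: Sequence[float], budget_ms: float = 500.0) -> bool:
    """True if P95 is at or below the budget (the gate fails when P95 > 500 ms)."""
    return p95(latencies_ms) <= budget_ms
```

Samples could come from timing repeated `/search` calls with `time.perf_counter()`; integer rank arithmetic avoids floating-point surprises in `ceil(0.95 * n)`.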

- `.github/workflows/ci.yml` runs lint, type-check, tests (Py 3.11 + 3.12) and publishes images to GHCR.
- A weekly `pg_dump` cron uploads a compressed dump as an artifact (Goal G4).
- Releases: bump the version in `pyproject.toml`, then run `make release`.

Code licensed under the MIT License. Made with ❤️ by the community, powered by FastAPI, SQLModel, pgvector, OpenAI and Taskmaster-AI.