PromptLab

AI query enhancement system with MLflow Prompt Registry integration

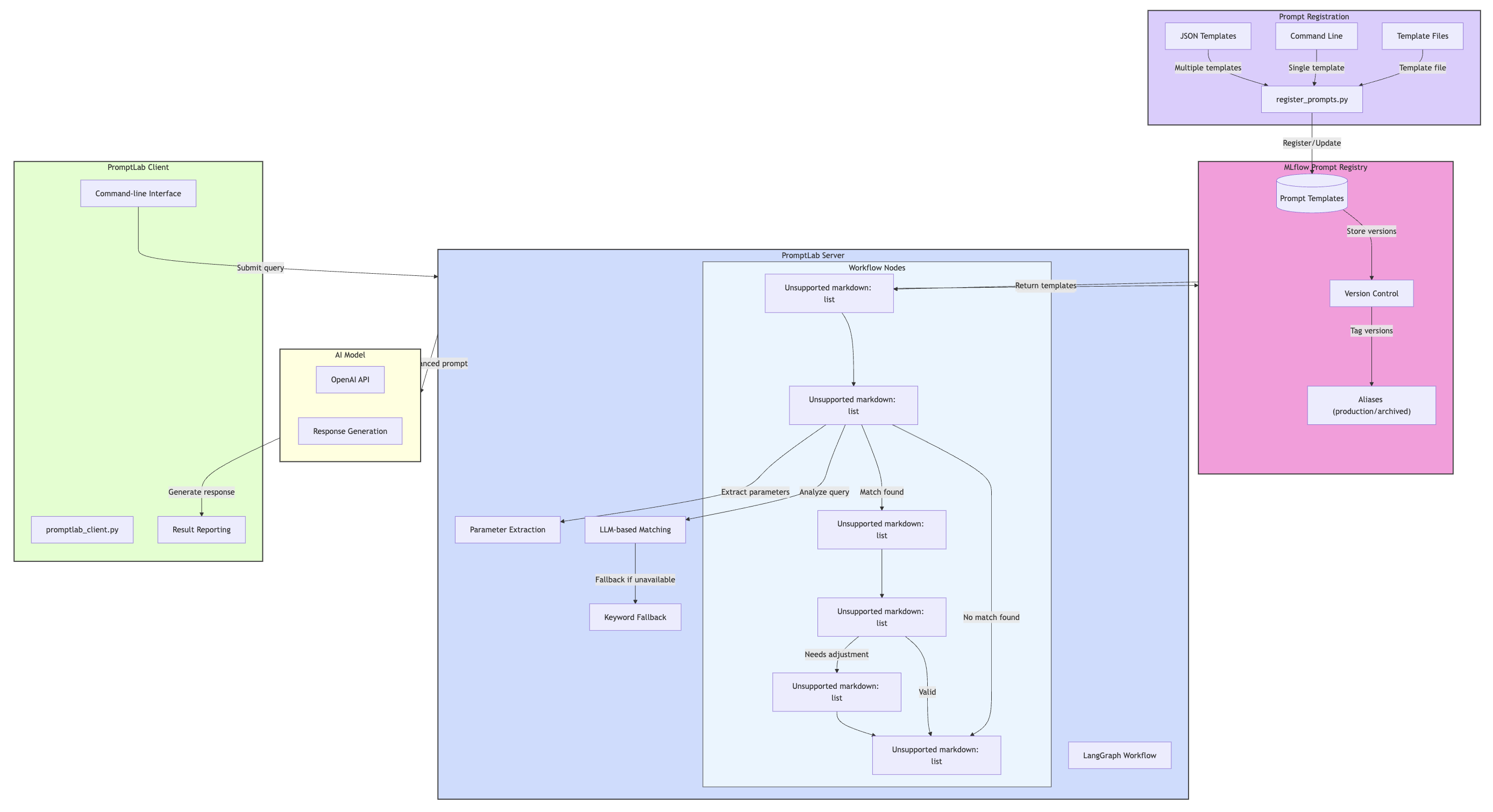

PromptLab is an intelligent system that transforms basic user queries into optimized prompts for AI systems using MLflow Prompt Registry. It dynamically matches user requests to the most appropriate prompt template and applies it with extracted parameters.

PromptLab combines MLflow Prompt Registry with dynamic prompt matching to create a flexible system for prompt engineering.

The system consists of three main components:

- `register_prompts.py`: Tool for registering and managing prompts in MLflow
- `promptlab_server.py`: Server with dynamic prompt matching and LangGraph workflow
- `promptlab_client.py`: Lightweight client for processing user queries

    promptlab/
    ├── promptlab_server.py     # Main server with LangGraph workflow
    ├── promptlab_client.py     # Client for processing queries
    ├── register_prompts.py     # MLflow prompt management tool
    ├── requirements.txt        # Project dependencies
    ├── advanced_prompts.json   # Additional prompt templates
    └── README.md               # Project documentation

`register_prompts.py`

- `register_prompt()`: Register a new prompt or version
- `update_prompt()`: Update an existing prompt (archives previous production)
- `list_prompts()`: List all registered prompts
- `register_from_file()`: Register multiple prompts from JSON
- `register_sample_prompts()`: Initialize with standard prompts

`promptlab_server.py`

- `load_all_prompts()`: Loads prompts from MLflow
- `match_prompt()`: Matches queries to appropriate templates
- `enhance_query()`: Applies selected template
- `validate_query()`: Validates enhanced queries
- LangGraph workflow: Orchestrates the query enhancement process

`promptlab_client.py`

`requirements.txt`

    # Clone the repository
    git clone https://github.com/iRahulPandey/PromptLab.git
    cd PromptLab

    # Install dependencies
    pip install -r requirements.txt

    # Set environment variables
    export OPENAI_API_KEY="your-openai-api-key"

Before using PromptLab, you need to register prompts in MLflow:

    # Register sample prompts (essay, email, technical, creative)
    python register_prompts.py register-samples

    # Register additional prompt types (recommended)
    python register_prompts.py register-file --file advanced_prompts.json

    # Verify registered prompts
    python register_prompts.py list

    # Start the server
    python promptlab_server.py

    # Process a query
    python promptlab_client.py "Write a blog post about machine learning"

    # List available prompts
    python promptlab_client.py --list

    # Enable verbose output
    python promptlab_client.py --verbose "Create a presentation on climate change"

PromptLab supports a wide range of prompt types:

| Prompt Type | Description | Example Use Case |
|---|---|---|
| essay_prompt | Academic writing | Research papers, analyses |
| email_prompt | Email composition | Professional communications |
| technical_prompt | Technical explanations | Concepts, technologies |
| creative_prompt | Creative writing | Stories, poems, fiction |
| code_prompt | Code generation | Functions, algorithms |
| summary_prompt | Content summarization | Articles, documents |
| analysis_prompt | Critical analysis | Data, texts, concepts |
| qa_prompt | Question answering | Context-based answers |
| social_media_prompt | Social media content | Platform-specific posts |
| blog_prompt | Blog article writing | Online articles |
| report_prompt | Formal reports | Business, technical reports |
| letter_prompt | Formal letters | Cover, recommendation letters |
| presentation_prompt | Presentation outlines | Slides, talks |
| review_prompt | Reviews | Products, media, services |
| comparison_prompt | Comparisons | Products, concepts, options |
| instruction_prompt | How-to guides | Step-by-step instructions |
| custom_prompt | Customizable template | Specialized use cases |

You can register new prompts in several ways:

    python register_prompts.py register \
      --name "new_prompt" \
      --template "Your template with {{ variables }}" \
      --message "Initial version" \
      --tags '{"type": "custom", "task": "specialized"}'

    # Create a text file with your template
    echo "Template content with {{ variables }}" > template.txt

    # Register using the file
    python register_prompts.py register \
      --name "long_prompt" \
      --template template.txt \
      --message "Complex template"

Create a JSON file with multiple prompts:

    {
      "prompts": [
        {
          "name": "prompt_name",
          "template": "Template with {{ variables }}",
          "commit_message": "Description",
          "tags": {"type": "category", "task": "purpose"}
        }
      ]
    }

Then register them:

    python register_prompts.py register-file --file your_prompts.json
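Before registering a file, it can help to check that it has the expected shape. The following is a minimal sketch, not PromptLab's own loader: the function name `load_prompt_file` and the choice of `name` and `template` as the required keys are assumptions made for illustration.

```python
import json

# Assumed minimum set of keys; the real tool may require more or fewer.
REQUIRED_KEYS = {"name", "template"}


def load_prompt_file(text: str) -> list:
    """Parse a prompts JSON document and return its validated entries."""
    data = json.loads(text)
    if not isinstance(data, dict) or not isinstance(data.get("prompts"), list):
        raise ValueError('expected an object with a top-level "prompts" list')
    for index, entry in enumerate(data["prompts"]):
        if not isinstance(entry, dict):
            raise ValueError(f"prompt #{index} is not an object")
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            raise ValueError(f"prompt #{index} is missing keys: {sorted(missing)}")
        # Optional fields get neutral defaults so later code can rely on them.
        entry.setdefault("commit_message", "")
        entry.setdefault("tags", {})
    return data["prompts"]


example = """
{
  "prompts": [
    {
      "name": "prompt_name",
      "template": "Template with {{ variables }}",
      "commit_message": "Description",
      "tags": {"type": "category", "task": "purpose"}
    }
  ]
}
"""

for prompt in load_prompt_file(example):
    print(prompt["name"], prompt["tags"])
```

Running a check like this first surfaces a malformed entry as a single clear error instead of a partial registration.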

When you update an existing prompt, the system automatically archives the previous production version:

    python register_prompts.py update \
      --name "essay_prompt" \
      --template "New improved template with {{ variables }}" \
      --message "Enhanced clarity and structure"

    # List all prompts
    python register_prompts.py list

    # View detailed information about a specific prompt
    python register_prompts.py details --name "essay_prompt"

Templates use variables in {{ variable }} format:

    Write a {{ formality }} email to my {{ recipient_type }} about {{ topic }} that includes:
    - A clear subject line
    - Appropriate greeting
    ...

When matching a query, the system automatically extracts values for these variables.
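To make the `{{ variable }}` convention concrete, here is a small sketch of how variable names could be discovered in a template and then filled in. It is not PromptLab's implementation: the helper names `template_variables` and `render` are hypothetical, and the values are supplied by hand here rather than extracted from a query.

```python
import re

# Matches {{ name }} with optional whitespace inside the braces.
VARIABLE_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def template_variables(template: str) -> list:
    """Return the distinct variable names in order of first appearance."""
    seen = []
    for name in VARIABLE_PATTERN.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def render(template: str, values: dict) -> str:
    """Substitute each {{ name }}; names without a value are left untouched."""
    return VARIABLE_PATTERN.sub(
        lambda match: values.get(match.group(1), match.group(0)), template
    )


template = "Write a {{ formality }} email to my {{ recipient_type }} about {{ topic }}"
values = {"formality": "formal", "recipient_type": "manager", "topic": "a deadline"}

print(template_variables(template))
print(render(template, values))
```

Leaving unknown placeholders in place, rather than replacing them with an empty string, makes a missed extraction visible in the final prompt.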

Each prompt can have multiple versions, distinguished by aliases. Updating a prompt, for example, archives the previous production version.
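The relationship between versions and aliases can be pictured with a small in-memory model. This is only an illustration of the idea, not how MLflow stores prompts: the class `PromptVersions`, its fields, and the alias name `production` are assumptions chosen to mirror the update behaviour described for `update_prompt()`.

```python
from dataclasses import dataclass, field


@dataclass
class PromptVersions:
    """Toy in-memory model of one prompt's versions and aliases (hypothetical)."""

    templates: list = field(default_factory=list)  # index 0 holds version 1
    aliases: dict = field(default_factory=dict)    # alias name -> version number
    archived: list = field(default_factory=list)   # version numbers no longer in production

    def update(self, template: str) -> int:
        """Add a version, archive the old production one, and promote the new one."""
        self.templates.append(template)
        version = len(self.templates)
        previous = self.aliases.get("production")
        if previous is not None:
            self.archived.append(previous)
        self.aliases["production"] = version
        return version

    def load(self, alias: str = "production") -> str:
        """Return the template that the given alias currently points to."""
        return self.templates[self.aliases[alias] - 1]


essay = PromptVersions()
essay.update("Write an essay about {{ topic }}")
essay.update("Write a well-structured essay about {{ topic }}")

print(essay.load())
print(essay.archived)
```

Because callers load by alias rather than by version number, promoting a new version does not require changing any code that uses the prompt.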

For specialized use cases, you can create highly customized prompts:

    python register_prompts.py register \
      --name "specialized_prompt" \
      --template "You are a {{ role }} with expertise in {{ domain }}. Create a {{ document_type }} about {{ topic }} that demonstrates {{ quality }}." \
      --message "Specialized template" \
      --tags '{"type": "custom", "task": "specialized", "domain": "finance"}'

If the system can't match a query to any prompt template, consider adding more diverse prompt templates to improve matching.

If the LLM service is unavailable, the system falls back to a path that does not depend on it, so it remains functional even without LLM access.
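One way a system like this could keep matching queries without an LLM is plain keyword counting. The sketch below is an assumption for illustration, not PromptLab's actual fallback: the `KEYWORDS` table and the function `match_by_keywords` are hypothetical, and only the prompt names are taken from the table of supported prompt types.

```python
from typing import Optional

# Hypothetical keyword table; prompt names come from the supported prompt types.
KEYWORDS = {
    "email_prompt": ("email", "mail"),
    "blog_prompt": ("blog", "post"),
    "code_prompt": ("code", "function", "algorithm"),
    "presentation_prompt": ("presentation", "slides", "talk"),
}


def match_by_keywords(query: str) -> Optional[str]:
    """Return the prompt whose keywords occur most often in the query, or None."""
    words = query.lower().split()
    best_name, best_score = None, 0
    for name, keywords in KEYWORDS.items():
        score = sum(words.count(keyword) for keyword in keywords)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


print(match_by_keywords("Write a blog post about machine learning"))
print(match_by_keywords("Create a presentation on climate change"))
print(match_by_keywords("Tell me something"))
```

A query that matches no keyword returns `None`, which leaves the caller free to pass the original query through unchanged.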