Code Reasoning

A Model Context Protocol (MCP) server that enhances Claude's ability to solve complex programming tasks through structured, step-by-step thinking.

Configure Claude Desktop by editing:

- macOS: `~/Library/Application Support/Claude/claude_desktop_config.json`
- Windows: `%APPDATA%\Claude\claude_desktop_config.json`
- Linux: `~/.config/Claude/claude_desktop_config.json`

Add the server to that file:

```json
{
  "mcpServers": {
    "code-reasoning": {
      "command": "npx",
      "args": ["-y", "@mettamatt/code-reasoning"]
    }
  }
}
```
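
If you script your setup, the path lookup above can be expressed in code. The TypeScript below is a minimal sketch, not part of @mettamatt/code-reasoning: `claudeDesktopConfigPath` and `Platform` are hypothetical names, the platform keys are assumed to match Node's `process.platform` values (`darwin`, `win32`, `linux`), and the home and `%APPDATA%` directories are passed in rather than read from the environment so the function stays pure.

```typescript
// Hypothetical helper, not part of @mettamatt/code-reasoning.
// Platform keys mirror Node's process.platform values.
type Platform = "darwin" | "win32" | "linux";

// Returns the Claude Desktop config path for the given platform.
// `home` stands in for "~" and `appData` for %APPDATA%.
function claudeDesktopConfigPath(platform: Platform, home: string, appData?: string): string {
  switch (platform) {
    case "darwin": // macOS
      return `${home}/Library/Application Support/Claude/claude_desktop_config.json`;
    case "win32": // Windows needs %APPDATA%
      if (!appData) throw new Error("APPDATA is not set");
      return `${appData}\\Claude\\claude_desktop_config.json`;
    case "linux":
      return `${home}/.config/Claude/claude_desktop_config.json`;
    default:
      throw new Error(`unsupported platform: ${String(platform)}`);
  }
}

// The server entry from the JSON above, as an object ready for JSON.stringify.
const desktopConfig = {
  mcpServers: {
    "code-reasoning": { command: "npx", args: ["-y", "@mettamatt/code-reasoning"] },
  },
};

console.log(claudeDesktopConfigPath("linux", "/home/alice"));
console.log(JSON.stringify(desktopConfig, null, 2));
```

In Node you would call it as `claudeDesktopConfigPath(process.platform as Platform, os.homedir(), process.env.APPDATA)`; injecting the directories also lets the function be tested on any operating system.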

Configure VS Code:

```json
{
  "mcp": {
    "servers": {
      "code-reasoning": {
        "command": "npx",
        "args": ["-y", "@mettamatt/code-reasoning"]
      }
    }
  }
}
```
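
VS Code settings files usually hold many unrelated keys, so the entry is best merged in rather than pasted over the whole file. The sketch below assumes the `mcp.servers` shape shown above; `addCodeReasoning`, `Settings`, and `ServerEntry` are hypothetical names, and reading or writing the real settings file (which may contain comments, since VS Code settings are JSONC) is left out.

```typescript
// Hypothetical types for the VS Code settings shape shown above.
interface ServerEntry {
  command: string;
  args: string[];
}

interface Settings {
  mcp?: { servers?: Record<string, ServerEntry> };
  [key: string]: unknown; // every other VS Code setting
}

// Returns a copy of `settings` with the code-reasoning server added under
// mcp.servers; other settings and other servers are kept, input is not mutated.
function addCodeReasoning(settings: Settings): Settings {
  return {
    ...settings,
    mcp: {
      ...settings.mcp,
      servers: {
        ...settings.mcp?.servers,
        "code-reasoning": { command: "npx", args: ["-y", "@mettamatt/code-reasoning"] },
      },
    },
  };
}

const before: Settings = {
  "editor.fontSize": 14,
  mcp: { servers: { other: { command: "node", args: ["other.js"] } } },
};
const after = addCodeReasoning(before);
console.log(JSON.stringify(after, null, 2));
```

Returning a new object rather than mutating the input keeps the caller in control of when the merged result is written back to disk.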

To trigger this MCP server, append the following to your chat messages:

Use sequential thinking to reason about this.
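
For clients that build messages programmatically, the same instruction can be added in code. This is a minimal, hypothetical helper: `withTrigger` is an illustrative name, not an API of this server. It appends the trigger phrase above as its own paragraph and leaves the message unchanged if it already ends with the phrase.

```typescript
// The trigger phrase from this README; the helper around it is illustrative.
const TRIGGER = "Use sequential thinking to reason about this.";

// Appends the trigger phrase once; calling it twice has no further effect.
function withTrigger(message: string): string {
  const trimmed = message.trimEnd();
  if (trimmed.endsWith(TRIGGER)) return trimmed;
  return trimmed.length === 0 ? TRIGGER : `${trimmed}\n\n${TRIGGER}`;
}

console.log(withTrigger("Why does this recursive parser overflow the stack?"));
```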

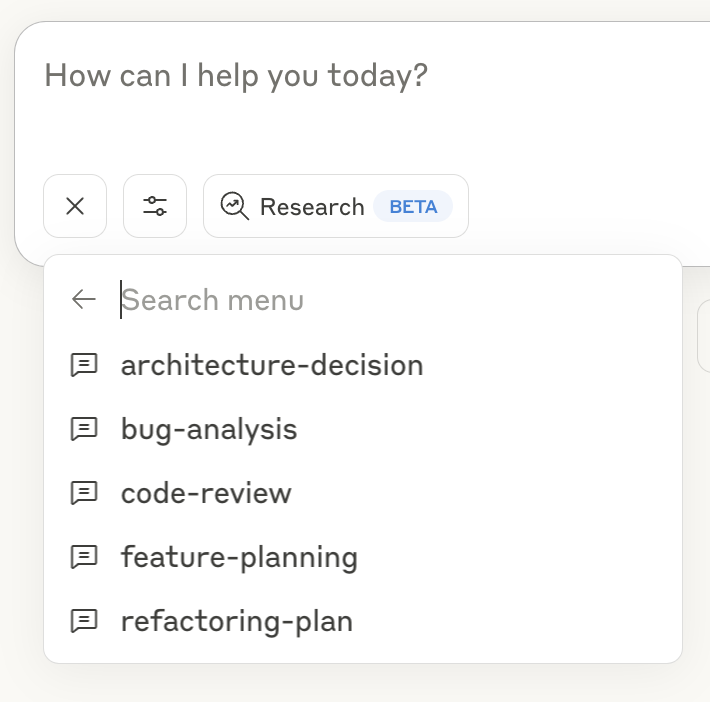

You can also use ready-to-go prompts that trigger Code-Reasoning. Type `/help` to see the specific commands.

See the Prompts Guide for details on using the prompt templates.

Command-line options:

- `--debug`: Enable detailed logging
- `--help` or `-h`: Show help information

Detailed documentation is available in the docs directory.
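
The `--debug` and `--help`/`-h` flags above map naturally onto a small options object. The sketch below is hypothetical: `parseArgs` and `CliOptions` are illustrative names, and the real entry point (`index.ts`) may parse its arguments differently. Rejecting unknown options is a design choice made here so that a typo such as `--degub` fails loudly instead of being ignored.

```typescript
// Hypothetical sketch of reading the two documented flags; the real
// entry point (index.ts) may parse its arguments differently.
interface CliOptions {
  debug: boolean; // --debug: enable detailed logging
  help: boolean; // --help / -h: show help information
}

function parseArgs(argv: readonly string[]): CliOptions {
  const opts: CliOptions = { debug: false, help: false };
  for (const arg of argv) {
    if (arg === "--debug") opts.debug = true;
    else if (arg === "--help" || arg === "-h") opts.help = true;
    else throw new Error(`unknown option: ${arg}`); // fail fast on typos
  }
  return opts;
}

// In Node, pass process.argv.slice(2); a literal keeps the example standalone.
const options = parseArgs(["--debug"]);
if (options.help) {
  console.log("Usage: code-reasoning [--debug] [--help | -h]"); // illustrative text
} else {
  console.log(`debug logging: ${options.debug ? "on" : "off"}`);
}
```

When the server is launched through `npx` as in the configs above, a flag would presumably be passed by appending it after the package name in the `args` array, for example `["-y", "@mettamatt/code-reasoning", "--debug"]`, since `npx` forwards trailing arguments to the command it runs.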

Project structure:

```
├── index.ts   # Entry point
├── src/       # Implementation source files
└── test/      # Placeholder for future test utilities
```

This project is licensed under the MIT License. See the LICENSE file for details.