Android UI

Real-time Android UI screenshot and analysis MCP server for AI development workflows

Model Context Protocol server that enables AI coding agents to see and analyze your Android app UI in real-time during development. Perfect for iterative UI refinement with Expo, React Native, Flutter, and native Android development workflows. Connect your AI agent to your running app and get instant visual feedback on UI changes.

Keywords: android development ai agent, real-time ui feedback, expo development tools, react native ui assistant, flutter development ai, android emulator screenshot, ai powered ui testing, visual regression testing ai, mobile app development ai, iterative ui development, ai code assistant android

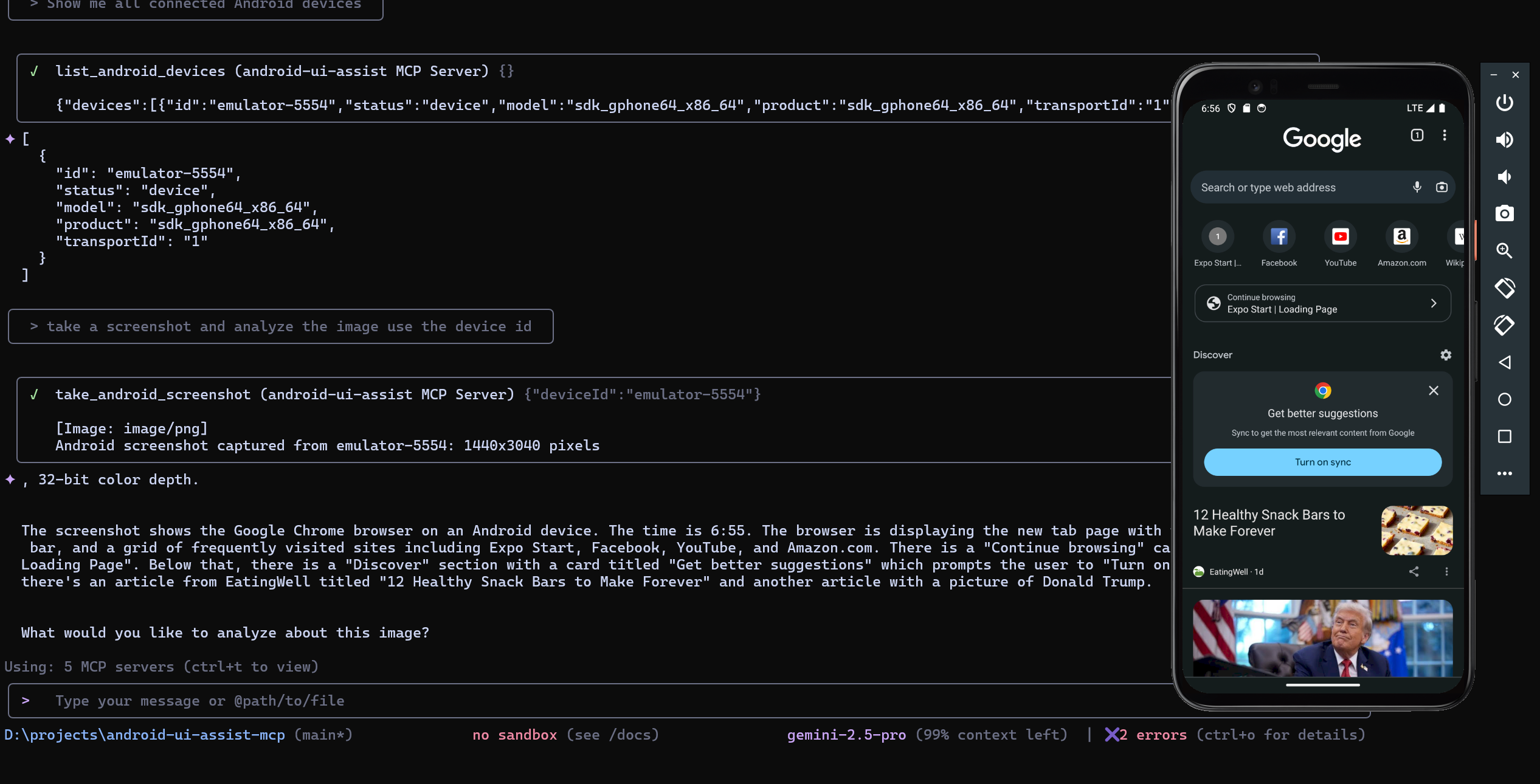

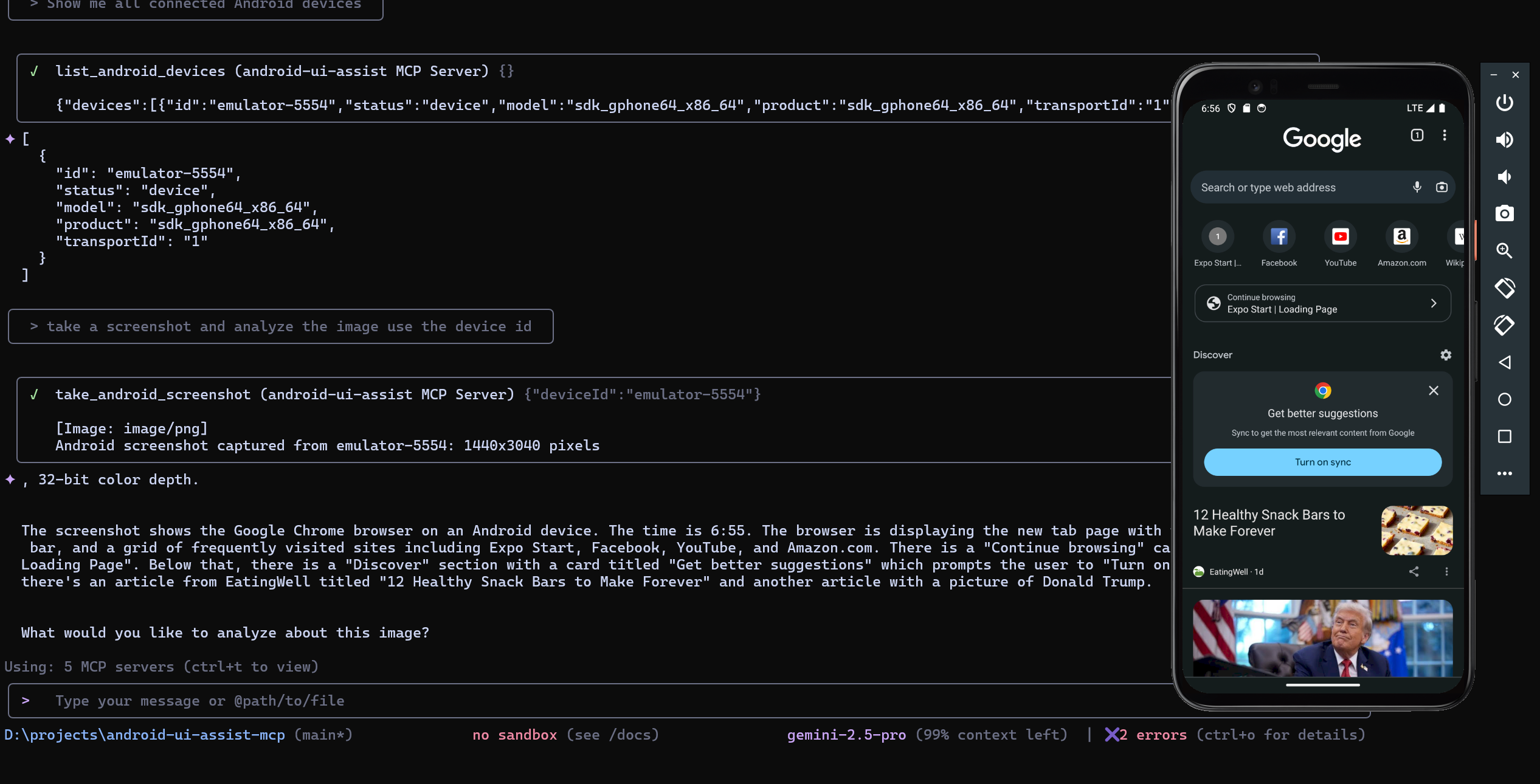

See the MCP server in action with real-time Android UI analysis:

| MCP Server Status | Live Development Workflow |
|---|---|
| Server ready with 2 tools available | AI agent analyzing Android UI in real-time |

Real-Time Development Workflow

AI Agent Integration

Developer Experience

This MCP server works with AI agents that support the Model Context Protocol. Configure your preferred agent to enable real-time Android UI analysis:

Claude Code:

    # CLI Installation
    claude mcp add android-ui-assist -- npx android-ui-assist-mcp

    # Local Development
    claude mcp add android-ui-assist -- node "D:\\projects\\android-ui-assist-mcp\\dist\\index.js"

Add to %APPDATA%\Claude\claude_desktop_config.json:

    {
      "mcpServers": {
        "android-ui-assist": {
          "command": "npx",
          "args": ["android-ui-assist-mcp"],
          "timeout": 10000
        }
      }
    }
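If the config file already lists other servers, the entry can be merged in programmatically rather than pasted by hand. The following is a minimal sketch, not part of this package: `McpServerEntry`, `McpConfig`, and `withServer` are hypothetical names, and the comment that `timeout` is in milliseconds is an assumption based on the value shown above.

```typescript
// Shape of one entry under "mcpServers", mirroring the JSON shown above.
interface McpServerEntry {
  command: string;
  args: string[];
  timeout?: number; // assumed to be milliseconds
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: adds or replaces one server entry and leaves
// the input object (and any other entries) untouched.
function withServer(config: McpConfig, name: string, entry: McpServerEntry): McpConfig {
  return { mcpServers: { ...config.mcpServers, [name]: entry } };
}

const existing: McpConfig = { mcpServers: {} };
const updated = withServer(existing, "android-ui-assist", {
  command: "npx",
  args: ["android-ui-assist-mcp"],
  timeout: 10000,
});

// Serialize with two-space indentation, ready to be written to the config file.
const configJson = JSON.stringify(updated, null, 2);
console.log(configJson);
```

Returning a new object instead of mutating the input keeps the original config available if the write fails.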

Add to .vscode/settings.json:

    {
      "github.copilot.enable": {
        "*": true
      },
      "mcp.servers": {
        "android-ui-assist": {
          "command": "npx",
          "args": ["android-ui-assist-mcp"],
          "timeout": 10000
        }
      }
    }

Gemini CLI:

    # CLI Installation
    gemini mcp add android-ui-assist npx android-ui-assist-mcp

    # Configuration
    # Create ~/.gemini/settings.json with:
    {
      "mcpServers": {
        "android-ui-assist": {
          "command": "npx",
          "args": ["android-ui-assist-mcp"]
        }
      }
    }

Install globally from npm:

    npm install -g android-ui-assist-mcp

Or build from source:

    git clone https://github.com/yourusername/android-ui-assist-mcp
    cd android-ui-assist-mcp
    npm install && npm run build

After installation, verify the package is available:

    android-ui-assist-mcp --version

    # For npm installation
    npx android-ui-assist-mcp --version

This MCP server transforms how you develop Android UIs by giving AI agents real-time visual access to your running application. Here's the typical workflow:

Perfect for:

| Component | Version | Installation |
|---|---|---|
| Node.js | 18.0+ | Download |
| npm | 8.0+ | Included with Node.js |
| ADB | Latest | Android SDK Platform Tools |

Expo:

    adb devices
    npx expo start   # or npm start

React Native:

    adb devices
    npx react-native start
    npx react-native run-android

Flutter:

    flutter run

Use hot reload (r) and hot restart (R) while getting AI feedback.

Docker:

    cd docker
    docker-compose up --build -d

Configure AI platform for Docker:

    {
      "mcpServers": {
        "android-ui-assist": {
          "command": "docker",
          "args": ["exec", "android-ui-assist-mcp", "node", "/app/dist/index.js"],
          "timeout": 15000
        }
      }
    }

    docker build -t android-ui-assist-mcp .
    docker run -it --rm --privileged -v /dev/bus/usb:/dev/bus/usb android-ui-assist-mcp

| Tool | Description | Parameters |
|---|---|---|
| take_android_screenshot | Captures device screenshot | deviceId (optional) |
| list_android_devices | Lists connected devices | None |
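AI agents normally issue these calls for you, but it can help to see what a tool invocation looks like on the wire. MCP uses JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The sketch below builds two such requests; `buildToolCall` is a hypothetical helper and `emulator-5554` is only an example serial.

```typescript
// JSON-RPC 2.0 request shape that MCP uses to invoke a tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper: wraps a tool name and its arguments in a request.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown> = {},
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

const listDevices = buildToolCall(1, "list_android_devices");
const screenshot = buildToolCall(2, "take_android_screenshot", {
  deviceId: "emulator-5554", // example serial, not a required value
});

// On the stdio transport each message is written as a single line of JSON.
const wire = [listDevices, screenshot].map((m) => JSON.stringify(m)).join("\n");
console.log(wire);
```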

take_android_screenshot

    {
      "name": "take_android_screenshot",
      "description": "Capture a screenshot from an Android device or emulator",
      "inputSchema": {
        "type": "object",
        "properties": {
          "deviceId": {
            "type": "string",
            "description": "Optional device ID. If not provided, uses the first available device"
          }
        }
      }
    }
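One common way to capture a screenshot over ADB is `adb exec-out screencap -p`, which writes PNG bytes to stdout. Whether this server uses exactly that command is an assumption; the sketch below only shows how the optional `deviceId` could map onto adb arguments and how the result could be sanity-checked. `buildScreencapArgs` and `looksLikePng` are hypothetical names.

```typescript
// Builds the adb argument list for a PNG screenshot written to stdout.
// "-s <serial>" targets one device. Without it, adb only succeeds when exactly
// one device is connected, so "first available device" behavior would need to
// resolve a serial before calling adb.
function buildScreencapArgs(deviceId?: string): string[] {
  const target = deviceId ? ["-s", deviceId] : [];
  return [...target, "exec-out", "screencap", "-p"];
}

// Every PNG file starts with this fixed 8-byte signature.
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

// Cheap check that captured bytes are a PNG and not an adb error message.
function looksLikePng(bytes: Uint8Array): boolean {
  return (
    bytes.length >= PNG_SIGNATURE.length &&
    PNG_SIGNATURE.every((b, i) => bytes[i] === b)
  );
}

console.log(buildScreencapArgs("emulator-5554").join(" "));
console.log(buildScreencapArgs().join(" "));
```

The argument array is meant to be passed to a process spawner together with the `adb` executable name, which avoids shell quoting issues with device serials.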

list_android_devices

    {
      "name": "list_android_devices",
      "description": "List all connected Android devices and emulators with detailed information",
      "inputSchema": {
        "type": "object",
        "properties": {}
      }
    }
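Device information of this kind is available from `adb devices -l`, which prints one line per device with a serial, a state, and `key:value` details. The sketch below parses that output; it illustrates the format and is not the server's actual implementation. `AdbDevice` and `parseAdbDevices` are hypothetical names, and the sample serials are made up.

```typescript
interface AdbDevice {
  id: string;
  state: string; // "device", "offline", "unauthorized", ...
  details: Record<string, string>; // e.g. model, product, transport_id
}

// Parses the text printed by `adb devices -l` into structured records.
function parseAdbDevices(output: string): AdbDevice[] {
  const devices: AdbDevice[] = [];
  for (const raw of output.split(/\r?\n/)) {
    const line = raw.trim();
    // Skip blank lines, the header line, and daemon start-up notices.
    if (!line || line.startsWith("List of devices") || line.startsWith("*")) continue;
    const [id, state, ...rest] = line.split(/\s+/);
    if (!id || !state) continue;
    const details: Record<string, string> = {};
    for (const token of rest) {
      const sep = token.indexOf(":");
      if (sep > 0) details[token.slice(0, sep)] = token.slice(sep + 1);
    }
    devices.push({ id, state, details });
  }
  return devices;
}

// Sample output with one usable emulator and one phone awaiting authorization.
const sample = [
  "List of devices attached",
  "emulator-5554          device product:sdk_gphone64_x86_64 model:sdk_gphone64_x86_64 device:emu64xa transport_id:1",
  "R58M123ABC             unauthorized usb:1-1 transport_id:2",
  "",
].join("\n");

const allDevices = parseAdbDevices(sample);
// Only devices in the "device" state can be used for screenshots.
const ready = allDevices.filter((d) => d.state === "device");
console.log(ready.map((d) => d.id));
```

A device listed as "unauthorized" usually needs the USB debugging prompt accepted on the device itself before it switches to the "device" state.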

Example: AI agent listing devices, capturing screenshots, and providing detailed UI analysis in real-time

With your development environment running (Expo, React Native, Flutter, etc.), interact with your AI agent:

Initial Analysis:

Iterative Development:

Cross-Platform Testing:

Development Debugging:

Check that adb devices shows your device with "device" status. If it does not, restart the ADB server:

    adb kill-server && adb start-server

Build, test, lint, and format:

    npm run build    # Production build
    npm test         # Run tests
    npm run lint     # Code linting
    npm run format   # Code formatting

    src/
    ├── server.ts          # MCP server implementation
    ├── types.ts           # Type definitions
    ├── utils/
    │   ├── adb.ts         # ADB command utilities
    │   ├── screenshot.ts  # Screenshot processing
    │   └── error.ts       # Error handling
    └── index.ts           # Entry point

MIT License - see LICENSE file for details.