Airflow

MCP server for Apache Airflow management using natural language queries and comprehensive API operations

Revolutionary Open Source Tool for Managing Apache Airflow with Natural Language

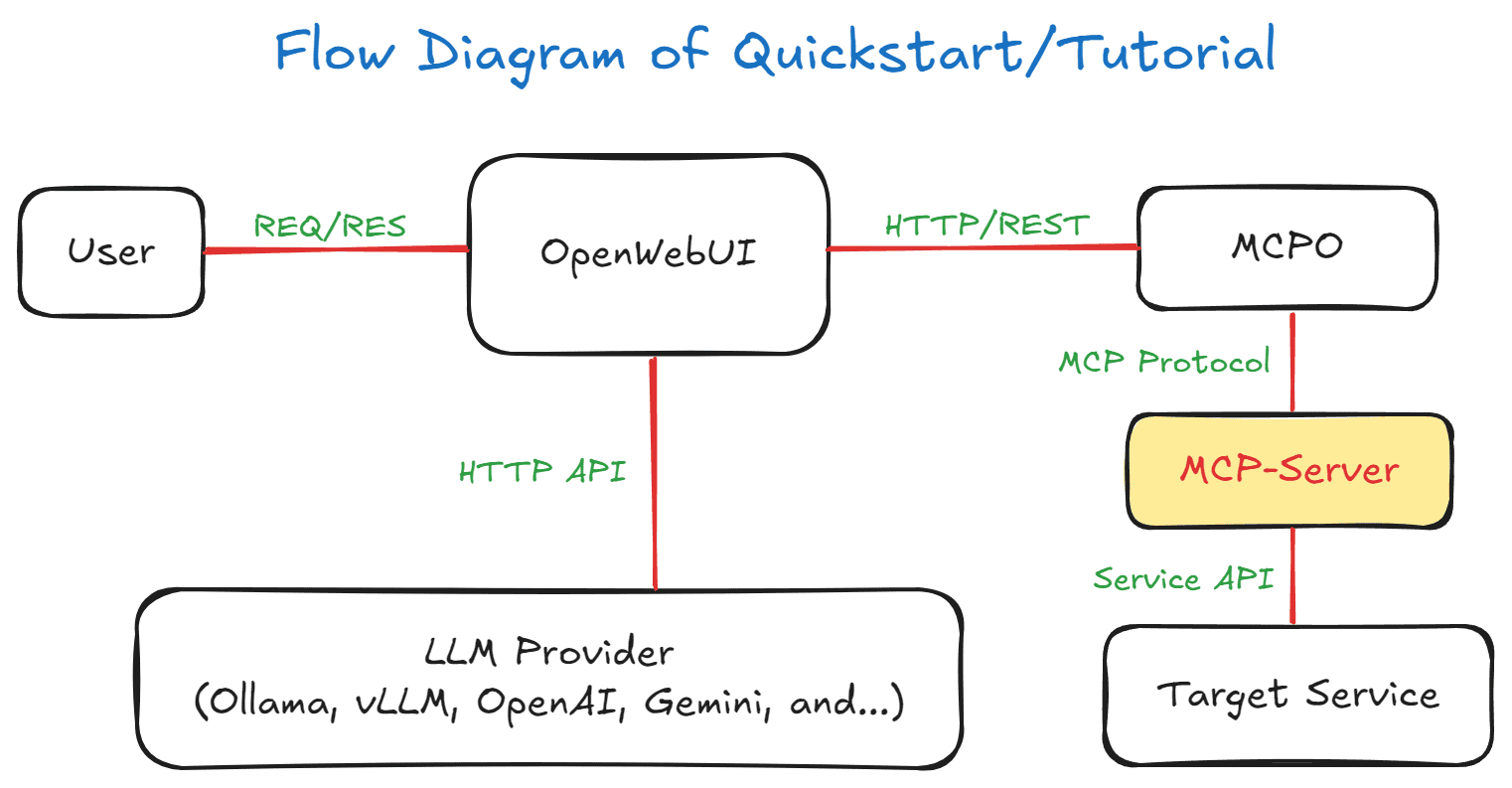

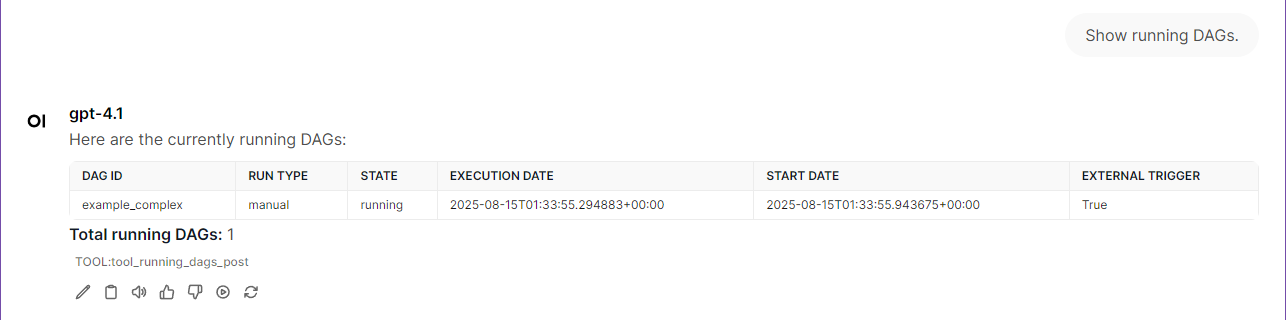

Have you ever wished you could manage your Apache Airflow workflows in natural language instead of hand-crafting REST API calls or clicking through the web interface? MCP-Airflow-API is an open-source project that makes this possible.

MCP-Airflow-API is an MCP server that leverages the Model Context Protocol (MCP) to transform Apache Airflow REST API operations into natural language tools. This project hides the complexity of API structures and enables intuitive management of Airflow clusters through natural language commands.

Now supports both Airflow API v1 (Airflow 2.x) and API v2 (Airflow 3.0+), with the version selected through an environment variable:

Key architecture: a single MCP server with 43 shared tools plus 2 asset tools exclusive to v2. It loads the appropriate toolset based on the AIRFLOW_API_VERSION environment variable.
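To make the version switch concrete, here is a minimal Python sketch of how a server could pick its toolset from the environment. The helper name resolve_api, the short tool lists, and the rule that the version segment is appended to AIRFLOW_API_BASE_URL (matching the /api/v1/dags path in the curl example) are assumptions for illustration, not the project's actual code.

```python
import os
from typing import List, Mapping, Optional, Tuple

# Illustrative subset of the 43 shared tools named elsewhere in this document.
SHARED_TOOLS = ["list_dags", "get_dags_detailed_batch", "get_config_section", "search_config_options"]
# The two v2-exclusive asset tools.
V2_ONLY_TOOLS = ["list_assets", "list_asset_events"]


def resolve_api(env: Optional[Mapping[str, str]] = None) -> Tuple[str, List[str]]:
    """Return (versioned API root URL, tool names to register)."""
    env = os.environ if env is None else env
    version = (env.get("AIRFLOW_API_VERSION") or "").strip().lower()
    if version not in ("v1", "v2"):
        raise ValueError(f"AIRFLOW_API_VERSION must be 'v1' or 'v2', got {version!r}")
    base_url = (env.get("AIRFLOW_API_BASE_URL") or "").rstrip("/")
    if not base_url:
        raise ValueError("AIRFLOW_API_BASE_URL is required")
    # Assumption: the version segment is appended to the base URL, e.g. /api -> /api/v1.
    tools = SHARED_TOOLS + (V2_ONLY_TOOLS if version == "v2" else [])
    return f"{base_url}/{version}", tools


root, tools = resolve_api({"AIRFLOW_API_VERSION": "v2", "AIRFLOW_API_BASE_URL": "http://localhost:48080/api"})
print(root, tools)
```

Keeping one code path and only appending the v2 tools mirrors the "shared tools plus exclusive tools" split described above.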

Traditional approach (example):

curl -X GET "http://localhost:8080/api/v1/dags?limit=100&offset=0" \
  -H "Authorization: Basic YWlyZmxvdzphaXJmbG93"
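For comparison, this sketch builds the same request pieces in Python. It shows that the Basic token in the curl command is simply the Base64 encoding of airflow:airflow. The helper names are hypothetical and nothing is sent over the network.

```python
import base64
from urllib.parse import urlencode


def basic_auth_header(username: str, password: str) -> str:
    """Return the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def list_dags_url(base_url: str, limit: int = 100, offset: int = 0) -> str:
    """Build the API v1 (Airflow 2.x) DAG-list URL used in the curl example."""
    query = urlencode({"limit": limit, "offset": offset})
    return f"{base_url.rstrip('/')}/v1/dags?{query}"


print(list_dags_url("http://localhost:8080/api"))  # http://localhost:8080/api/v1/dags?limit=100&offset=0
print(basic_auth_header("airflow", "airflow"))  # Basic YWlyZmxvdzphaXJmbG93
```

Actually sending the request would be one more step (for example with urllib.request), and this is the kind of plumbing MCP-Airflow-API hides behind natural language.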

MCP-Airflow-API approach (natural language):

"Show me the currently running DAGs"

📝 Need a test Airflow cluster? Use our companion project Airflow-Docker-Compose with support for both Airflow 2.x and Airflow 3.x environments!

For quick evaluation and testing:

git clone https://github.com/call518/MCP-Airflow-API.git
cd MCP-Airflow-API
# Configure your Airflow credentials
cp .env.example .env
# Edit .env with your Airflow API settings
# Start all services
docker-compose up -d
# Access OpenWebUI at http://localhost:3002/
# API documentation at http://localhost:8002/docs

Tool endpoint on port 8002: http://localhost:8002/airflow-api

To run the server directly with uvx instead:

uvx --python 3.12 mcp-airflow-api

Local Access (stdio mode)

{
  "mcpServers": {
    "mcp-airflow-api": {
      "command": "uvx",
      "args": ["--python", "3.12", "mcp-airflow-api"],
      "env": {
        "AIRFLOW_API_VERSION": "v2",
        "AIRFLOW_API_BASE_URL": "http://localhost:8080/api",
        "AIRFLOW_API_USERNAME": "airflow",
        "AIRFLOW_API_PASSWORD": "airflow"
      }
    }
  }
}

Remote Access (streamable-http mode without authentication)

{ "mcpServers": { "mcp-airflow-api": { "type": "streamable-http", "url": "http://localhost:8000/mcp" } } }

Remote Access (streamable-http mode with Bearer token authentication - Recommended)

{
  "mcpServers": {
    "mcp-airflow-api": {
      "type": "streamable-http",
      "url": "http://localhost:8000/mcp",
      "headers": {
        "Authorization": "Bearer your-secure-secret-key-here"
      }
    }
  }
}

Multiple Airflow Clusters with Different Versions

{
  "mcpServers": {
    "airflow-2x-cluster": {
      "command": "uvx",
      "args": ["--python", "3.12", "mcp-airflow-api"],
      "env": {
        "AIRFLOW_API_VERSION": "v1",
        "AIRFLOW_API_BASE_URL": "http://localhost:38080/api",
        "AIRFLOW_API_USERNAME": "airflow",
        "AIRFLOW_API_PASSWORD": "airflow"
      }
    },
    "airflow-3x-cluster": {
      "command": "uvx",
      "args": ["--python", "3.12", "mcp-airflow-api"],
      "env": {
        "AIRFLOW_API_VERSION": "v2",
        "AIRFLOW_API_BASE_URL": "http://localhost:48080/api",
        "AIRFLOW_API_USERNAME": "airflow",
        "AIRFLOW_API_PASSWORD": "airflow"
      }
    }
  }
}

💡 Pro Tip: Use the test clusters from Airflow-Docker-Compose for the above configuration - they run on ports 38080 (2.x) and 48080 (3.x) respectively!
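If you manage several clusters, you can generate this configuration instead of editing JSON by hand. The sketch uses a hypothetical helper, airflow_server_entry, that reproduces the same structure as the multi-cluster example; the airflow/airflow credentials match the test clusters only and should be replaced for real deployments.

```python
import json


def airflow_server_entry(version: str, port: int, username: str = "airflow", password: str = "airflow") -> dict:
    """One uvx-launched stdio server entry for a cluster on localhost:<port>."""
    return {
        "command": "uvx",
        "args": ["--python", "3.12", "mcp-airflow-api"],
        "env": {
            "AIRFLOW_API_VERSION": version,  # v1 for Airflow 2.x, v2 for Airflow 3.0+
            "AIRFLOW_API_BASE_URL": f"http://localhost:{port}/api",
            "AIRFLOW_API_USERNAME": username,
            "AIRFLOW_API_PASSWORD": password,
        },
    }


config = {
    "mcpServers": {
        "airflow-2x-cluster": airflow_server_entry("v1", 38080),
        "airflow-3x-cluster": airflow_server_entry("v2", 48080),
    }
}
print(json.dumps(config, indent=2))
```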

git clone https://github.com/call518/MCP-Airflow-API.git
cd MCP-Airflow-API
pip install -e .
# Run in stdio mode
python -m mcp_airflow_api

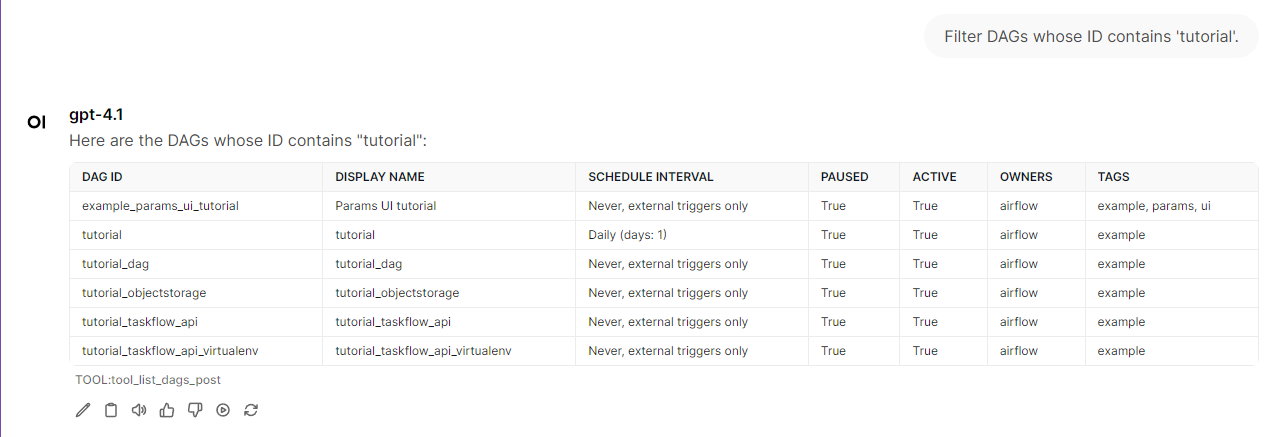

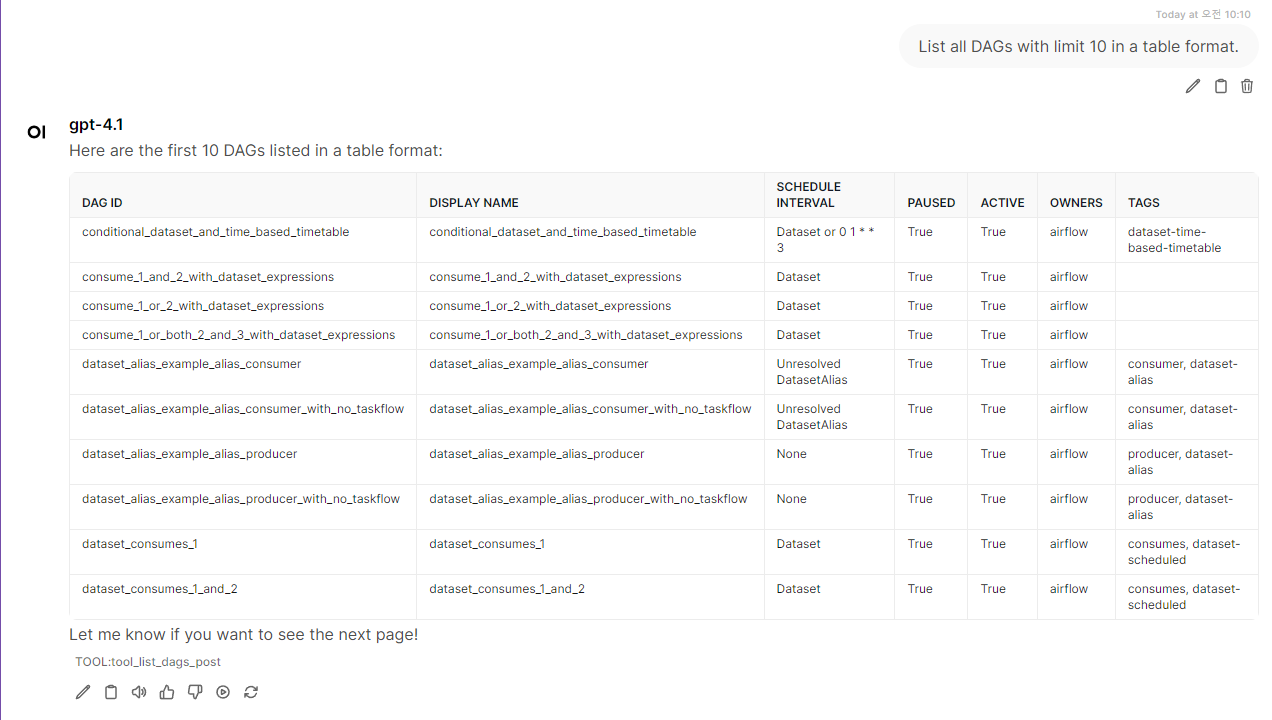

Natural Language Queries

No need to learn complex API syntax. Just ask as you would naturally speak:

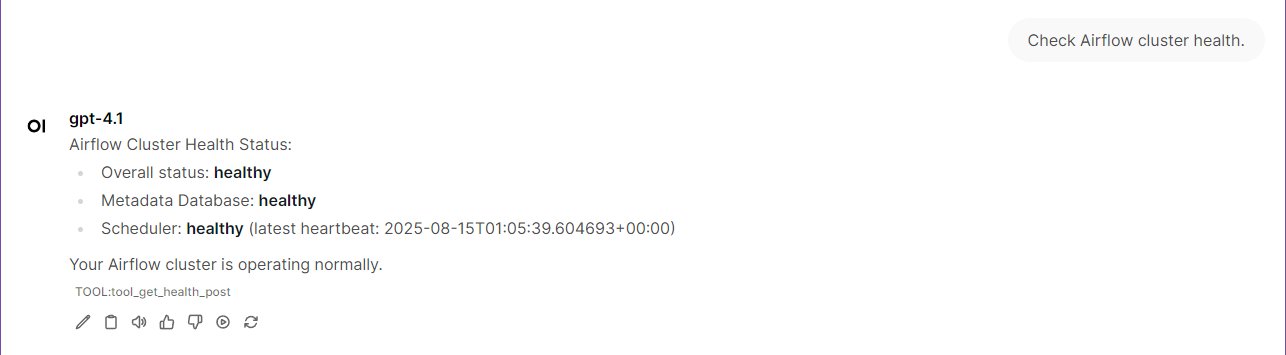

Comprehensive Monitoring Capabilities

Real-time cluster status monitoring:

Dynamic API Version Support

Single MCP server adapts to your Airflow version:

Set AIRFLOW_API_VERSION=v1 for Airflow 2.x or AIRFLOW_API_VERSION=v2 for Airflow 3.0+.

Comprehensive Tool Coverage

Covers almost all Airflow API functionality:

Large Environment Optimization

Efficiently handles large environments with 1000+ DAGs:

Leveraging Model Context Protocol (MCP)

MCP is an open standard for secure connections between AI applications and data sources, providing:

Support for Two Transport Modes

stdio mode: Direct MCP client integration for local environments
streamable-http mode: HTTP-based deployment for Docker and remote access

Environment Variable Control:

FASTMCP_TYPE=stdio # Default: Direct MCP client mode
FASTMCP_TYPE=streamable-http # Docker/HTTP mode
FASTMCP_PORT=8000 # HTTP server port (Docker internal)
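A sketch of how these two variables could be interpreted, assuming stdio and 8000 are the defaults stated above. resolve_transport is a hypothetical helper; treating FASTMCP_TYPE case-insensitively and validating the port range are choices made for this example, not documented behavior.

```python
import os
from typing import Mapping, Optional, Tuple

VALID_TRANSPORTS = ("stdio", "streamable-http")


def resolve_transport(env: Optional[Mapping[str, str]] = None) -> Tuple[str, Optional[int]]:
    """Return (transport, port); the port only matters for streamable-http."""
    env = os.environ if env is None else env
    transport = (env.get("FASTMCP_TYPE") or "stdio").strip().lower()  # default: stdio
    if transport not in VALID_TRANSPORTS:
        raise ValueError(f"FASTMCP_TYPE must be one of {VALID_TRANSPORTS}, got {transport!r}")
    if transport == "stdio":
        return transport, None
    port = int(env.get("FASTMCP_PORT") or 8000)  # default HTTP port
    if not 1 <= port <= 65535:
        raise ValueError(f"FASTMCP_PORT out of range: {port}")
    return transport, port


print(resolve_transport({"FASTMCP_TYPE": "streamable-http"}))  # ('streamable-http', 8000)
```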

Comprehensive Airflow API Coverage

Full implementation of official Airflow REST APIs:

Complete Docker Support

Full Docker Compose setup with 3 separate services:

OpenWebUI (port 3002)
MCP server (internal port 8000, exposed via 18002)
API endpoint with documentation at /docs (port 8002)

# Required - Dynamic API Version Selection (NEW!)
# Single server supports both v1 and v2 - just change this variable!
AIRFLOW_API_VERSION=v1 # v1 for Airflow 2.x, v2 for Airflow 3.0+
AIRFLOW_API_BASE_URL=http://localhost:8080/api

# Test Cluster Connection Examples (replace the two values above with one of these pairs):
# For Airflow 2.x test cluster (from Airflow-Docker-Compose)
# AIRFLOW_API_VERSION=v1
# AIRFLOW_API_BASE_URL=http://localhost:38080/api
# For Airflow 3.x test cluster (from Airflow-Docker-Compose)
# AIRFLOW_API_VERSION=v2
# AIRFLOW_API_BASE_URL=http://localhost:48080/api

# Authentication
AIRFLOW_API_USERNAME=airflow
AIRFLOW_API_PASSWORD=airflow

# Optional - MCP Server Configuration
MCP_LOG_LEVEL=INFO # DEBUG/INFO/WARNING/ERROR/CRITICAL
FASTMCP_TYPE=stdio # stdio/streamable-http
FASTMCP_PORT=8000 # HTTP server port (Docker mode)

# Bearer Token Authentication for streamable-http mode
# Enable authentication (recommended for production)
# Default: false (when undefined, empty, or null)
# Values: true/false, 1/0, yes/no, on/off (case insensitive)
REMOTE_AUTH_ENABLE=false # true/false
REMOTE_SECRET_KEY=your-secure-secret-key-here

Official Documentation:

| Feature | API v1 (Airflow 2.x) | API v2 (Airflow 3.0+) |
|---|---|---|
| Total Tools | 43 tools | 45 tools |
| Shared Tools | 43 (100%) | 43 (96%) |
| Exclusive Tools | 0 | 2 (Asset Management) |
| Basic DAG Operations | ✅ | ✅ Enhanced |
| Task Management | ✅ | ✅ Enhanced |
| Connection Management | ✅ | ✅ Enhanced |
| Pool Management | ✅ | ✅ Enhanced |
| Asset Management | ❌ | ✅ New |
| Asset Events | ❌ | ✅ New |
| Data-Aware Scheduling | ❌ | ✅ New |
| Enhanced DAG Warnings | ❌ | ✅ New |
| Advanced Filtering | Basic | ✅ Enhanced |

For streamable-http mode, this MCP server supports Bearer token authentication to secure remote access. This is especially important when running the server in production environments.

Enable Authentication:

# In .env file
REMOTE_AUTH_ENABLE=true
REMOTE_SECRET_KEY=your-secure-secret-key-here

Or via CLI:

python -m mcp_airflow_api --type streamable-http --auth-enable --secret-key your-secure-secret-key-here

Note:

REMOTE_AUTH_ENABLE defaults to false when undefined, empty, or null. Supported values are true/false, 1/0, yes/no, on/off (case insensitive).
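The accepted values can be captured in a small parser. This is a sketch, not the server's code: parse_auth_enable is a hypothetical name, and treating the literal string "null" as false and raising an error on unrecognized values are assumptions (the real server may silently fall back to false).

```python
from typing import Optional

TRUE_VALUES = {"true", "1", "yes", "on"}
FALSE_VALUES = {"false", "0", "no", "off"}


def parse_auth_enable(raw: Optional[str]) -> bool:
    """Interpret REMOTE_AUTH_ENABLE; undefined, empty or null means False."""
    if raw is None:
        return False
    value = raw.strip().lower()  # case insensitive, surrounding spaces ignored
    if value in ("", "null"):
        return False
    if value in TRUE_VALUES:
        return True
    if value in FALSE_VALUES:
        return False
    raise ValueError(f"Unsupported REMOTE_AUTH_ENABLE value: {raw!r}")


print(parse_auth_enable("Yes"), parse_auth_enable(None))  # True False
```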

When authentication is enabled, MCP clients must include the Bearer token in the Authorization header:

{
  "mcpServers": {
    "mcp-airflow-api": {
      "type": "streamable-http",
      "url": "http://your-server:8000/mcp",
      "headers": {
        "Authorization": "Bearer your-secure-secret-key-here"
      }
    }
  }
}
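On the server side, checking this header comes down to parsing the Bearer scheme and comparing the token with REMOTE_SECRET_KEY. The sketch below is a hypothetical illustration of that check (is_authorized is not the project's middleware); it uses hmac.compare_digest, which is designed to resist timing attacks.

```python
import hmac
from typing import Optional


def is_authorized(authorization_header: Optional[str], secret_key: str) -> bool:
    """Return True if the header is 'Bearer <token>' and the token matches secret_key."""
    if not authorization_header or not secret_key:
        return False
    scheme, _, token = authorization_header.strip().partition(" ")
    # HTTP authentication scheme names are case-insensitive ("Bearer" == "bearer").
    if scheme.lower() != "bearer" or not token.strip():
        return False
    # compare_digest avoids leaking, through timing, how much of the token matched.
    return hmac.compare_digest(token.strip().encode("utf-8"), secret_key.encode("utf-8"))


print(is_authorized("Bearer your-secure-secret-key-here", "your-secure-secret-key-here"))  # True
```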

When authentication fails, the server returns:

Example Docker Compose service definition:

version: '3.8'
services:
  mcp-server:
    build:
      context: .
      dockerfile: Dockerfile.MCP-Server
    environment:
      - FASTMCP_PORT=8000
      - AIRFLOW_API_VERSION=v1
      - AIRFLOW_API_BASE_URL=http://your-airflow:8080/api
      - AIRFLOW_API_USERNAME=airflow
      - AIRFLOW_API_PASSWORD=airflow


For testing and development, use our companion project Airflow-Docker-Compose which supports both Airflow 2.x and 3.x environments.

git clone https://github.com/call518/Airflow-Docker-Compose.git
cd Airflow-Docker-Compose

For testing API v1 compatibility with stable production features:

# Navigate to Airflow 2.x environment
cd airflow-2.x
# (Optional) Customize environment variables
cp .env.template .env
# Edit .env file as needed
# Deploy Airflow 2.x cluster
./run-airflow-cluster.sh
# Access Web UI
# URL: http://localhost:38080
# Username: airflow / Password: airflow

Environment details:

Image: apache/airflow:2.10.2
Port: 38080 (configurable via AIRFLOW_WEBSERVER_PORT)
API: /api/v1/* endpoints

For testing API v2 with the latest features, including Assets management:

# Navigate to Airflow 3.x environment
cd airflow-3.x
# (Optional) Customize environment variables
cp .env.template .env
# Edit .env file as needed
# Deploy Airflow 3.x cluster
./run-airflow-cluster.sh
# Access API Server
# URL: http://localhost:48080
# Username: airflow / Password: airflow

Environment details:

Image: apache/airflow:3.0.6
Port: 48080 (configurable via AIRFLOW_APISERVER_PORT)
API: /api/v2/* endpoints + Assets management

For comprehensive testing across different Airflow versions:

# Start Airflow 2.x (port 38080)
cd airflow-2.x && ./run-airflow-cluster.sh
# Start Airflow 3.x (port 48080)
cd ../airflow-3.x && ./run-airflow-cluster.sh

| Feature | Airflow 2.x | Airflow 3.x |
|---|---|---|
| Authentication | Basic Auth | JWT Tokens (FabAuthManager) |
| Default Port | 38080 | 48080 |
| API Endpoints | /api/v1/* | /api/v2/* |
| Assets Support | ❌ Limited/Experimental | ✅ Full Support |
| Provider Packages | providers | distributions |
| Stability | ✅ Production Ready | 🧪 Beta/Development |

To stop and clean up the test environments:

# Run each command from the Airflow-Docker-Compose root directory
# For Airflow 2.x
cd airflow-2.x && ./cleanup-airflow-cluster.sh
# For Airflow 3.x
cd airflow-3.x && ./cleanup-airflow-cluster.sh

Repository: https://github.com/call518/MCP-Airflow-API

How to Contribute

Please consider starring the project if you find it useful.

MCP-Airflow-API changes the paradigm of data engineering and workflow management:

No need to memorize REST API calls — just ask in natural language:

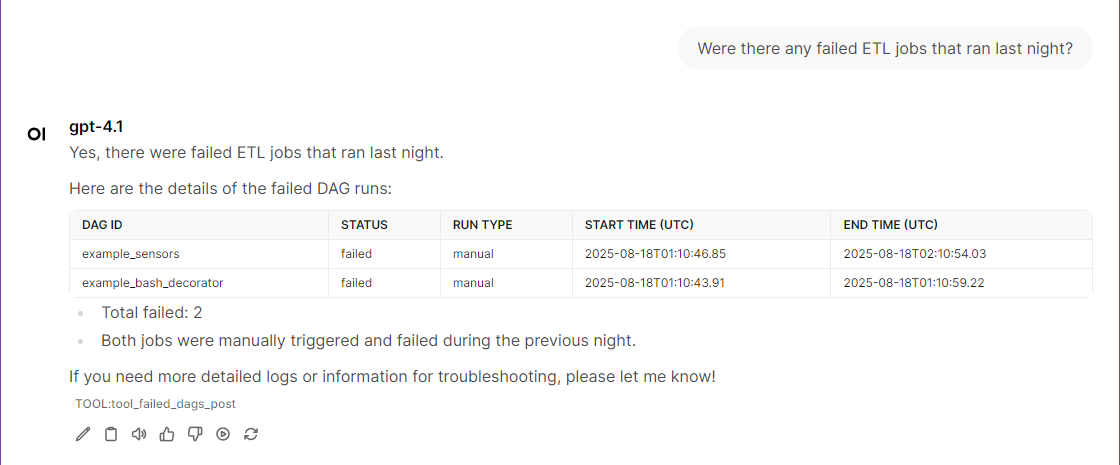

"Show me the status of currently running ETL jobs."

#Apache-Airflow #MCP #ModelContextProtocol #DataEngineering #DevOps #WorkflowAutomation #NaturalLanguage #OpenSource #Python #Docker #AI-Integration

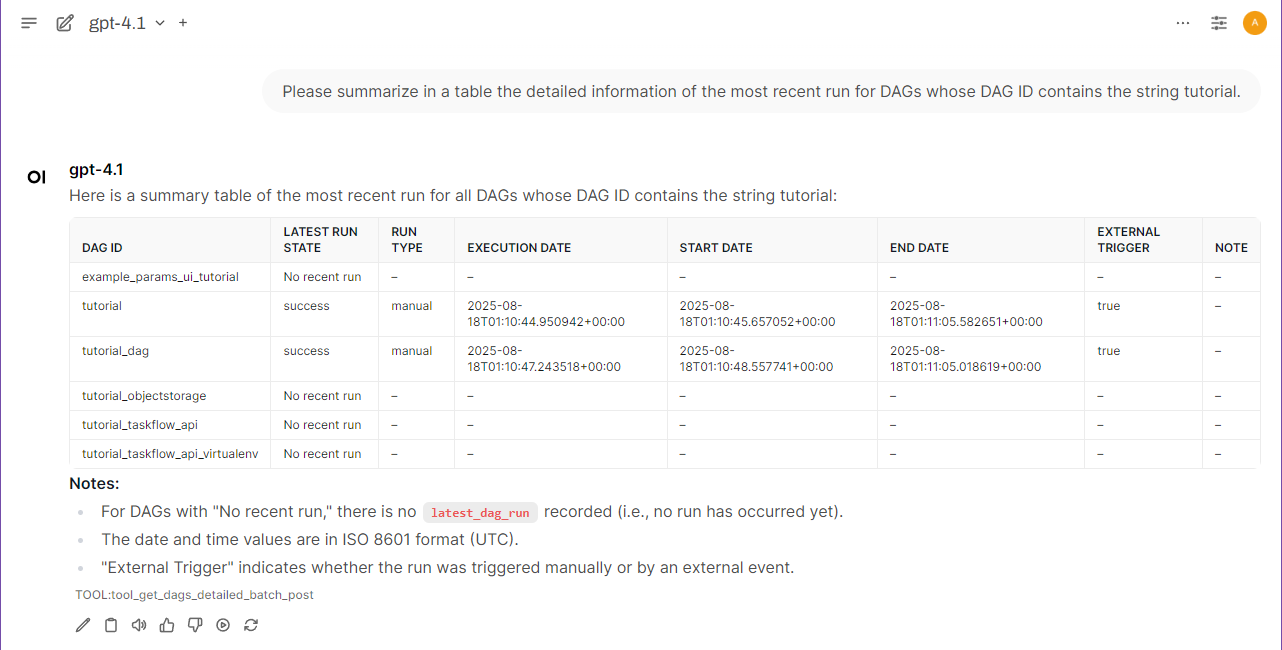

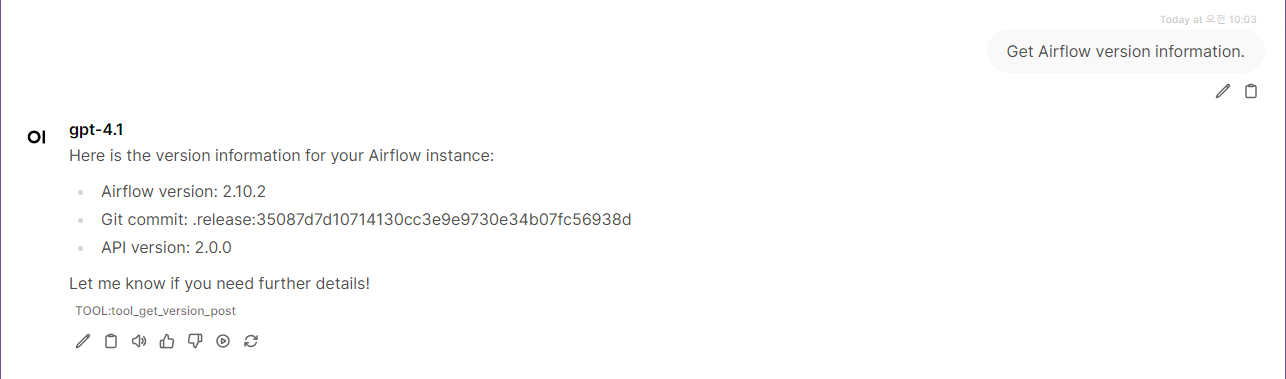

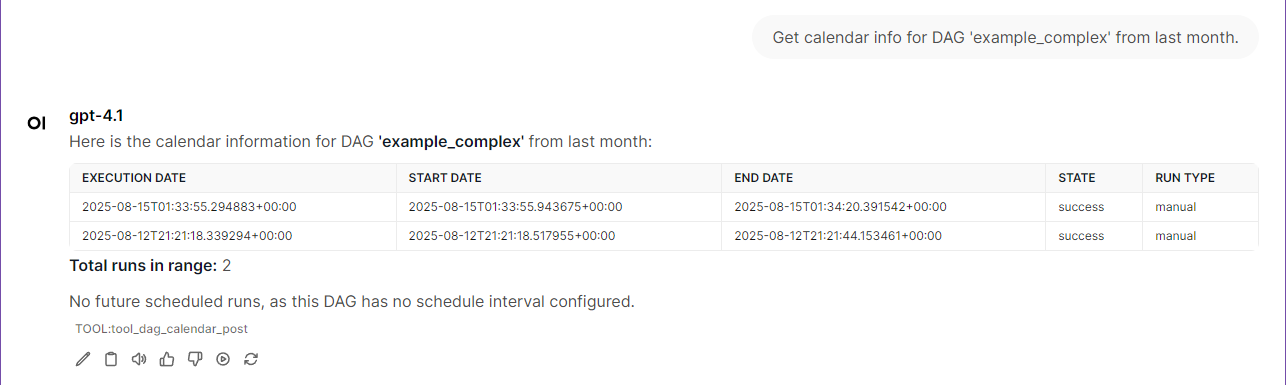

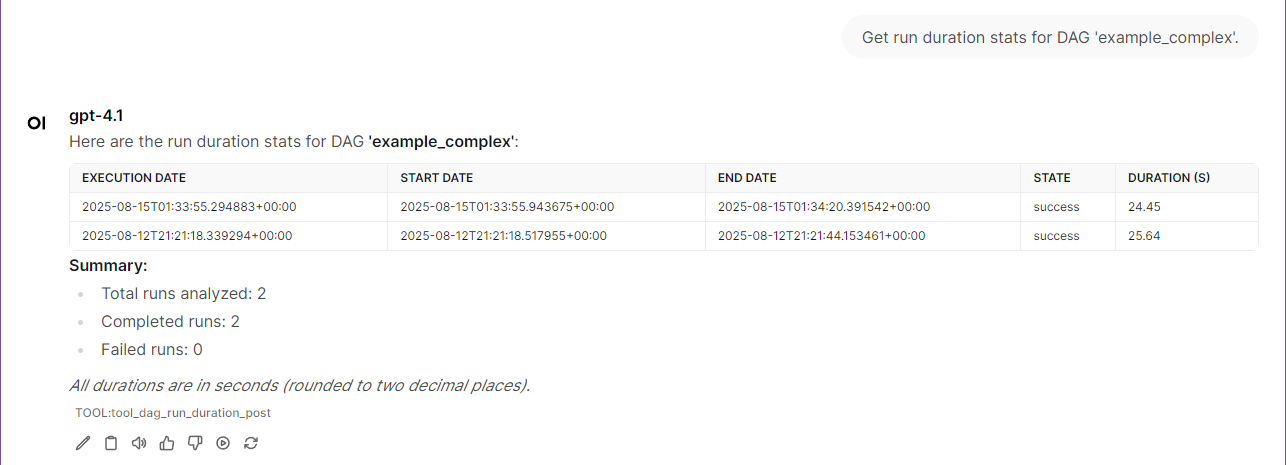

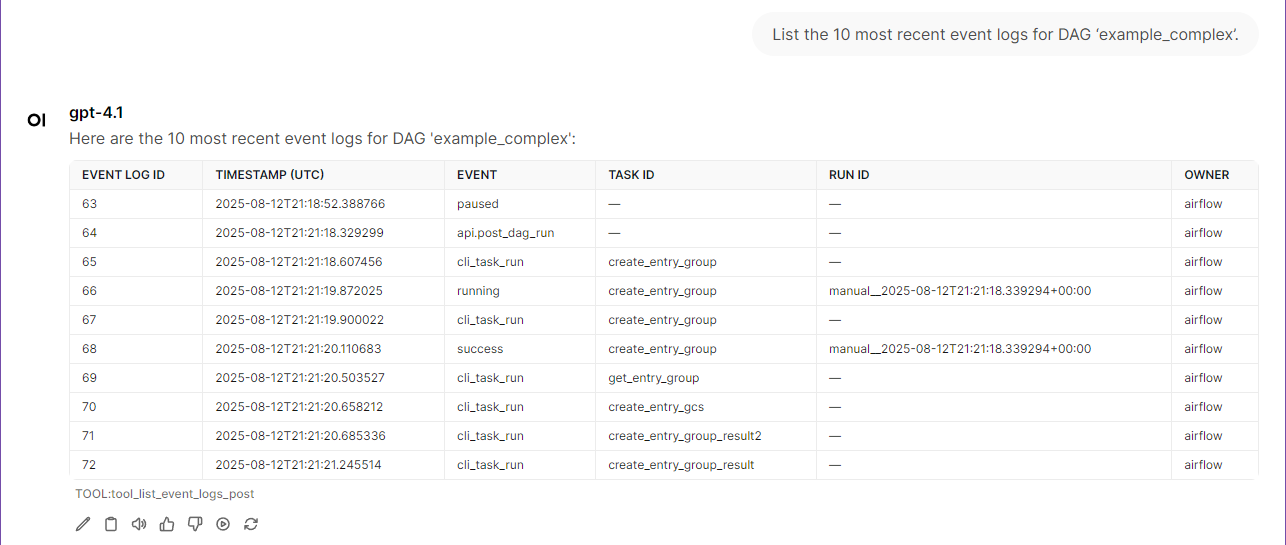

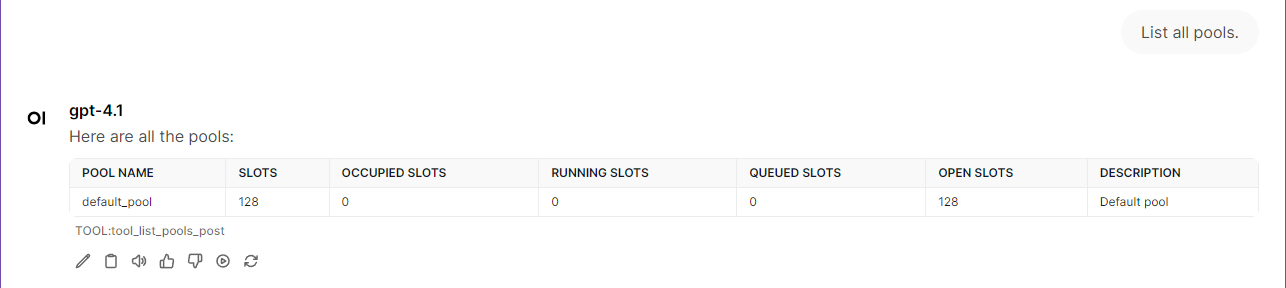

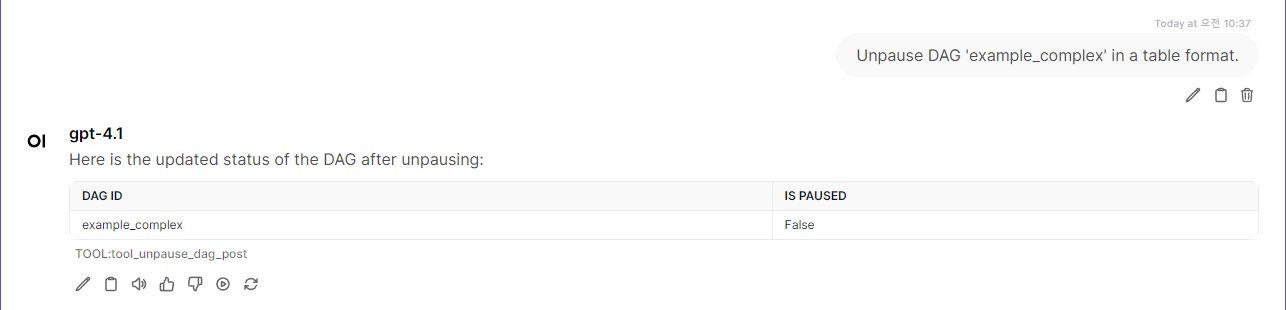

This section provides comprehensive examples of how to use MCP-Airflow-API tools with natural language queries.

list_dags(limit=20, offset=20)
list_dags(id_contains="etl")
list_dags(name_contains="daily")
get_dags_detailed_batch(fetch_all=True)
get_dags_detailed_batch(is_active=True, is_paused=False)
get_dags_detailed_batch(id_contains="example", limit=50)
get_config_section("core")
get_config_section("webserver")
search_config_options("database")
search_config_options("timeout")

Important: Configuration tools require expose_config = True in the [webserver] section of airflow.cfg. Even admin users get 403 errors if this is disabled.
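The limit/offset pair in list_dags(limit=20, offset=20) asks for the second page of 20 DAGs. Walking every page is a simple loop, sketched here against a fake fetcher. paginate and fake_list_dags are hypothetical, and stopping at the first short page is an assumption about how the end of the list is detected.

```python
from typing import Callable, Iterator, List


def paginate(fetch_page: Callable[[int, int], List[str]], limit: int = 20) -> Iterator[str]:
    """Yield every item by requesting pages of `limit` items at increasing offsets."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    offset = 0
    while True:
        page = fetch_page(limit, offset)
        yield from page
        if len(page) < limit:  # a short (or empty) page means we reached the end
            break
        offset += limit


# Hypothetical stand-in for list_dags(limit=..., offset=...) over 45 DAG ids.
FAKE_DAGS = [f"dag_{i:02d}" for i in range(45)]
requested_offsets: List[int] = []


def fake_list_dags(limit: int, offset: int) -> List[str]:
    requested_offsets.append(offset)
    return FAKE_DAGS[offset:offset + limit]


all_ids = list(paginate(fake_list_dags, limit=20))
print(len(all_ids), requested_offsets)  # 45 [0, 20, 40]
```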

get_dags_detailed_batch(fetch_all=True)
get_dags_detailed_batch(is_active=True)
get_dags_detailed_batch(id_contains="etl")

Note: get_dags_detailed_batch returns each DAG with both configuration details (from get_dag()) and a latest_dag_run field containing the most recent execution information (run_id, state, execution_date, start_date, end_date, etc.).
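Conceptually, the latest_dag_run field is a DAG's details merged with its most recent run. This sketch picks the run with the newest start_date and skips runs that have not started. The record shapes and helper names are assumptions, and the real tool may instead ask the Airflow API for runs already sorted by date.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional

Run = Dict[str, Any]


def latest_run(runs: List[Run]) -> Optional[Run]:
    """Return the run with the newest start_date, or None if no run has started."""
    started = [r for r in runs if r.get("start_date")]
    if not started:
        return None
    return max(started, key=lambda r: datetime.fromisoformat(r["start_date"]))


def with_latest_run(dag_detail: Dict[str, Any], runs: List[Run]) -> Dict[str, Any]:
    """Return a copy of dag_detail with a latest_dag_run field added."""
    merged = dict(dag_detail)  # copy so the caller's dict is left untouched
    merged["latest_dag_run"] = latest_run(runs)
    return merged


dag = {"dag_id": "etl_daily", "is_paused": False}
runs = [
    {"run_id": "scheduled__2024-05-01", "state": "success", "start_date": "2024-05-01T00:05:00+00:00"},
    {"run_id": "scheduled__2024-05-02", "state": "running", "start_date": "2024-05-02T00:05:00+00:00"},
    {"run_id": "manual__queued", "state": "queued", "start_date": None},
]
result = with_latest_run(dag, runs)
print(result["latest_dag_run"]["run_id"])  # scheduled__2024-05-02
```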

Tools automatically base relative date calculations on the server's current date/time:

| User Input | Calculation Method | Example Format |
|---|---|---|
| "yesterday" | current_date - 1 day | YYYY-MM-DD (1 day before current) |
| "last week" | current_date - 7 days to current_date - 1 day | YYYY-MM-DD to YYYY-MM-DD (7 days range) |
| "last 3 days" | current_date - 3 days to current_date | YYYY-MM-DD to YYYY-MM-DD (3 days range) |
| "this morning" | current_date 00:00 to 12:00 | YYYY-MM-DDTHH:mm:ssZ format |

The server always uses its current date/time for these calculations.
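The table can be expressed as a small function. This sketch assumes the server clock is read in UTC and lets you pass a fixed date for testing; relative_range is a hypothetical helper that simply encodes the four rows of the table.

```python
from datetime import date, datetime, time, timedelta, timezone
from typing import Optional, Tuple


def relative_range(phrase: str, today: Optional[date] = None) -> Tuple[str, str]:
    """Map a relative phrase from the table to a (start, end) pair of strings."""
    # Assumption: "today" comes from the server clock in UTC unless passed in.
    today = today or datetime.now(timezone.utc).date()
    if phrase == "yesterday":
        day = today - timedelta(days=1)
        return day.isoformat(), day.isoformat()  # a single day: start == end
    if phrase == "last week":
        return (today - timedelta(days=7)).isoformat(), (today - timedelta(days=1)).isoformat()
    if phrase == "last 3 days":
        return (today - timedelta(days=3)).isoformat(), today.isoformat()
    if phrase == "this morning":
        fmt = "%Y-%m-%dT%H:%M:%SZ"
        start = datetime.combine(today, time(0, 0), tzinfo=timezone.utc)
        end = datetime.combine(today, time(12, 0), tzinfo=timezone.utc)
        return start.strftime(fmt), end.strftime(fmt)
    raise ValueError(f"Unsupported phrase: {phrase!r}")


print(relative_range("last week", today=date(2024, 3, 1)))  # ('2024-02-23', '2024-02-29')
```

Injecting today keeps the calculation deterministic in tests, while production calls simply omit it.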

Available only when AIRFLOW_API_VERSION=v2 (Airflow 3.0+):

list_assets(uri_pattern="s3://data-lake")
list_asset_events(asset_uri="s3://bucket/file.csv")
list_asset_events(source_dag_id="etl_pipeline")

Data-Aware Scheduling Examples:

🤝 Got ideas? Found bugs? Want to add cool features?

We're always excited to welcome new contributors! Whether you're fixing a typo, adding a new monitoring tool, or improving documentation - every contribution makes this project better.

Ways to contribute:

Pro tip: The codebase is designed to be super friendly for adding new tools. Check out the existing @mcp.tool() functions in airflow_api.py.

This MCP server is designed for easy extensibility. After you have explored the main features and Quickstart, you can add your own custom tools as follows:

Add reusable data functions to src/mcp_airflow_api/functions.py:

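from typing import Any, Dict, List, Optional

async def get_your_custom_data(target_resource: Optional[str] = None) -> List[Dict[str, Any]]:
    """Your custom data retrieval function."""
    # Example implementation - adapt to your service.
    # get_data_connection, fetch_data_from_source and your_conditions are placeholders
    # for your own helpers and filter logic; they are not part of this project.
    data_source = await get_data_connection(target_resource)
    results = await fetch_data_from_source(
        source=data_source,
        filters=your_conditions,
        aggregations=["count", "sum", "avg"],
        sorting=["count DESC", "timestamp ASC"],
    )
    return results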

Add your tool function to src/mcp_airflow_api/airflow_api.py:

# Assumes airflow_api.py already provides mcp, logger, format_table_data and typing.Optional
@mcp.tool()
async def get_your_custom_analysis(limit: int = 50, target_name: Optional[str] = None) -> str:
    """
    [Tool Purpose]: Brief description of what your tool does

    [Exact Functionality]:
    - Feature 1: Data aggregation and analysis
    - Feature 2: Resource monitoring and insights
    - Feature 3: Performance metrics and reporting

    [Required Use Cases]:
    - When user asks "your specific analysis request"
    - Your business-specific monitoring needs

    Args:
        limit: Maximum results (1-100)
        target_name: Target resource/service name

    Returns:
        Formatted analysis results
    """
    try:
        limit = max(1, min(limit, 100))  # Always validate input
        results = await get_your_custom_data(target_resource=target_name)
        results = (results or [])[:limit]  # Treat None as "no rows", then cap at limit
        return format_table_data(results, f"Custom Analysis (Top {len(results)})")
    except Exception as e:
        logger.error(f"Failed to get custom analysis: {e}")
        return f"Error: {str(e)}"
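Before wiring the tool into the server, you can exercise its logic in isolation. In this sketch get_your_custom_data and format_table_data are hypothetical stubs (150 fake rows and a plain-text formatter), and the @mcp.tool() decorator is omitted so the file runs on its own; it checks that the limit is clamped to the 1-100 range.

```python
import asyncio
import logging
from typing import Any, Dict, List, Optional

logger = logging.getLogger("custom_tool_demo")


async def get_your_custom_data(target_resource: Optional[str] = None) -> List[Dict[str, Any]]:
    """Hypothetical stub that returns 150 fake rows."""
    await asyncio.sleep(0)  # stands in for a real awaitable I/O call
    return [{"resource": target_resource or "all", "rank": i} for i in range(150)]


def format_table_data(rows: List[Dict[str, Any]], title: str) -> str:
    """Hypothetical minimal formatter: a title line plus one line per row."""
    lines = [title] + [", ".join(f"{k}={v}" for k, v in row.items()) for row in rows]
    return "\n".join(lines)


async def get_your_custom_analysis(limit: int = 50, target_name: Optional[str] = None) -> str:
    # In airflow_api.py this function would carry the @mcp.tool() decorator.
    try:
        limit = max(1, min(limit, 100))  # clamp to the documented 1-100 range
        results = await get_your_custom_data(target_resource=target_name)
        results = (results or [])[:limit]
        return format_table_data(results, f"Custom Analysis (Top {len(results)})")
    except Exception as e:
        logger.error(f"Failed to get custom analysis: {e}")
        return f"Error: {e}"


print(asyncio.run(get_your_custom_analysis(limit=500)).splitlines()[0])  # Custom Analysis (Top 100)
```

Once the stubbed version behaves as expected, swap in your real data function and add the decorator in airflow_api.py.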

Add your helper function to imports in src/mcp_airflow_api/airflow_api.py:

from .functions import (
    # ...existing imports...
    get_your_custom_data,  # Add your new function
)

Add your tool description to src/mcp_airflow_api/prompt_template.md for better natural language recognition:

### **Your Custom Analysis Tool**
### X. **get_your_custom_analysis**
**Purpose**: Brief description of what your tool does
**Usage**: "Show me your custom analysis" or "Get custom analysis for database_name"
**Features**: Data aggregation, resource monitoring, performance metrics
**Required**: `target_name` parameter for specific resource analysis

# Local testing
./scripts/run-mcp-inspector-local.sh

# Or with Docker
docker-compose up -d
docker-compose logs -f mcp-server

# Test with natural language:
# "Show me your custom analysis"
# "Get custom analysis for target_name"

That's it! Your custom tool is ready to use with natural language queries.

Freely use, modify, and distribute under the MIT License.